Software Testing Process: Best Practices

by Alexey Onyshchenko and Yosyf Manucharian

Introduction

A well-designed software testing process is an important step toward building a high-quality product; it also helps to save money in the long run, minimize time to market, satisfy the end-users, attract new users, and retain the old ones.

But how do you properly build a software testing process in your company or on a project so that it brings benefits?

Based on our experience, we decided to write a guide, sharing our tips on how to effectively build your software testing process. We’ll talk about the best practices that we use at Waverley, what is important to remember, and what is best to use on projects.

To set up a good, proper Software Testing process on the project, we should first understand the concepts that would influence the final result:

- What is Quality Assurance (QA) / Quality Control (QC) / Testing?

- What is the deployment environment and how will it be used?

- Compatibility testing and its importance in the Software Testing Process

- Levels of testing

- How do you organize a Software testing process within a sprint?

- Test documentation and ways to store it

Let’s start from the most basic concept – Quality Assurance.

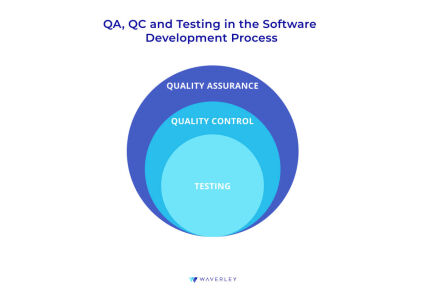

What is Quality Assurance?

One of the main characteristics of the product is quality, and low quality can cause a lot of issues for the product on the market. That’s why it is very important to have an efficient Quality Assurance (QA) process in the software development lifecycle.

Quality Assurance is a process that prevents issues in the future by conducting a series of actions now. In other words, QA is about creating a process that makes sure products are of high quality in an effective and efficient way.

There are a lot of different procedures performed to assure quality, such as:

- Audits

- Selection of the appropriate software testing tools

- Testing team training

- Testing process set up

- Review of the test documentation

- Participation in requirements analysis, etc.

Quality Control (QC) is a part of QA that focuses not on processes but on the product itself. The main goal of QC is to determine whether the system under test functions the way it is intended to. QC Engineers detect bugs by testing the product against its requirements, and those bugs are then fixed. This improves product quality by finding and eliminating issues that would otherwise be found by the end-user. QC also shows what the current product quality level is, so that this information can be shared with stakeholders and the appropriate measures can be taken.

A standard set of QC activities is the following:

- Software manual or automation testing

- Bug reporting

- Bug fixes verification

- Creation and support of test documentation, e.g. test cases and checklists

To summarize, a Quality Assurance process is an integral part of a well-established software development process and of a high-quality product. It also:

- Saves time, as the QA process prevents errors from the very beginning and gets issues fixed at the early stages, so that, in the end, product development takes much less time.

- Saves money and reputation, since it helps to identify issues before they get discovered by end-users and cause trouble for the company.

- Boosts customer confidence, because end-users can trust and rely on the high-quality product.

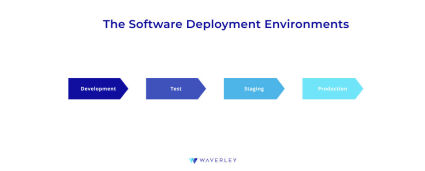

Deployment Environments

An environment is a system or collection of systems where we deploy a computer program and run it. A correctly configured process for deploying environments will be a benefit to your team.

Website development commonly uses four environments: Development, Test, Staging, and Production.

Development Environment

There’s a specific environment for development. Each developer deploys their own version of the code from the local machine to the development environment.

At this point, the developers themselves check their changes and, if there are any inconsistencies or issues, fix them before it all goes to the test team.

Test Environment

The dedicated environment where the testing team works.

Their task is to execute the test cases prepared in advance and test the functionality of the product version. As soon as the main part of the functionality is checked, the identified bugs are fixed, and the version of the product is stable, it can be deployed to Staging.

Staging Environment

It’s a pre-production environment that should be as similar to the production environment as possible. The QA team needs to verify that the new changes are displayed and work as expected; only then can the changes be deployed to Production.

Production Environment

The environment in which the latest working version of the product is available for the target audience. Production should always be available to end-users.

To summarize, the main advantage of using multiple environments is building and testing a product in isolation. As a result, only a carefully tested product can reach the end-users, since most of the bugs are caught at various development stages in isolated environments.
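
The promotion order described above can be pictured as a small model. This is only a sketch for illustration, not a deployment tool: the names `Environment`, `next_environment`, and `can_promote` are hypothetical, and real pipelines usually express the same rule in their CI/CD configuration.

```python
from enum import IntEnum
from typing import Optional


class Environment(IntEnum):
    """The four environments, listed in promotion order."""
    DEVELOPMENT = 1
    TEST = 2
    STAGING = 3
    PRODUCTION = 4


def next_environment(current: Environment) -> Optional[Environment]:
    """Return the environment a build moves to next, or None after Production."""
    if current is Environment.PRODUCTION:
        return None
    return Environment(current + 1)


def can_promote(current: Environment, checks_passed: bool) -> bool:
    """A build moves forward only if the checks in its current environment passed."""
    return checks_passed and next_environment(current) is not None
```

For example, `can_promote(Environment.TEST, checks_passed=False)` is `False`, which mirrors the idea that an unstable version never reaches Staging.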

Take Care of The Compatibility

It’s crucial to make sure your application works properly on different platforms and environments, e.g. different browsers and their versions, desktop OSs (macOS, Windows, etc.), mobile OSs (Android, iOS), and mobile devices.

The tested applications can behave differently in different environments and may have environment-specific issues. For example, in my practice, I’ve seen a lot of cases where an application’s UI was displayed with issues in Safari while everything looked perfect in Chrome.

These kinds of errors usually happen because modern browsers have different browser engines which render front-end code in different ways.

The three most widespread browser engines are:

- WebKit (Safari)

- Blink (Chrome, Edge)

- Gecko (Firefox)

Knowing this, it is usually enough to cover every browser engine rather than every browser. Moreover, issues can sometimes occur in particular browser versions, since browser vendors continuously make changes that can unfortunately cause problems for the application. That’s why a good practice is to conduct system testing in the current and previous versions of each browser.

With this in mind, you can select the browsers for cross-browser compatibility testing more effectively.
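
As a rough sketch of this selection logic, the snippet below keeps one browser per engine and pairs it with its current and previous version. The engine assignments come from the list above; the function names and the version numbers used in the example are hypothetical placeholders, and treating "previous version" as "current major version minus one" is a simplifying assumption.

```python
# Engine assignments follow the list above.
BROWSER_ENGINES = {
    "Safari": "WebKit",
    "Chrome": "Blink",
    "Edge": "Blink",
    "Firefox": "Gecko",
}


def pick_one_browser_per_engine(browsers_by_priority):
    """Keep the first browser seen for each engine, preserving priority order."""
    chosen = {}
    for browser in browsers_by_priority:
        chosen.setdefault(BROWSER_ENGINES[browser], browser)
    return list(chosen.values())


def build_test_targets(browsers_by_priority, current_versions):
    """Pair each selected browser with its current and previous major version."""
    targets = []
    for browser in pick_one_browser_per_engine(browsers_by_priority):
        current = current_versions[browser]
        targets.append((browser, current))
        targets.append((browser, current - 1))  # assumption: previous = current - 1
    return targets
```

Called with the priority list `["Chrome", "Edge", "Safari", "Firefox"]`, the sketch drops Edge because Chrome already covers Blink, which leaves six browser and version pairs instead of eight.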

When it comes to compatibility testing of mobile apps, we should remember that there is now a wide range of mobile devices (smartphones, tablets, smart devices, etc.) with different screen resolutions, performance, OS versions, and so on. That’s why it’s very important to carefully select the set of devices on which testing will be conducted. First of all, you should narrow down the range of test devices by asking the customer about their preferences and expectations regarding the devices and OS versions the application has to support. If you don’t have this information, you could start by gathering publicly available statistics on the most used mobile devices and OS versions.

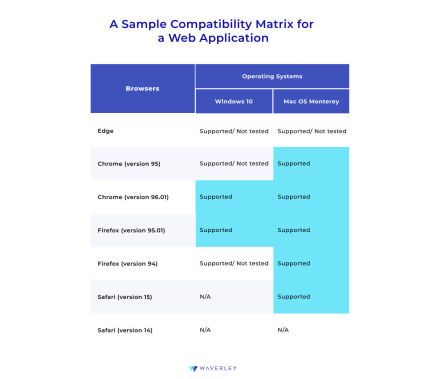

The next step would be to create a compatibility matrix with your selected environments: e.g. mobile devices, browsers, versions, etc., and team commitments.

This table represents the general team commitments regarding application support in different environments. Ideally, it should be discussed and approved by the customer to be on the same page with the team and have one source of truth.
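
If it helps to see the idea in a structured form, a compatibility matrix can be sketched as a list of rows. Everything in this sketch is illustrative: the `MatrixRow` fields, the sample rows, and the commitment wording are assumptions, and the real entries come from the customer and from usage statistics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MatrixRow:
    platform: str      # e.g. "Desktop browser" or "Mobile device"
    environment: str   # browser, or device plus OS
    versions: str      # versions the commitment applies to
    commitment: str    # what the team agrees to support


# Illustrative rows only; real entries are agreed with the customer.
COMPATIBILITY_MATRIX = [
    MatrixRow("Desktop browser", "Chrome", "current and previous", "full support"),
    MatrixRow("Desktop browser", "Safari", "current and previous", "full support"),
    MatrixRow("Mobile device", "Android phone", "agreed with customer", "critical flows only"),
]


def commitment_for(matrix, environment):
    """Look up the agreed commitment; anything not listed is treated as out of scope."""
    for row in matrix:
        if row.environment == environment:
            return row.commitment
    return "not supported"
```

Keeping the matrix in one shared place means that a question such as "do we support this browser?" has a single answer for both the team and the customer.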

What are the Levels of Software Testing?

Testing at all levels is key to ending up with a high-quality product.

There are four levels of software testing:

Unit Testing

Unit testing, also called component testing, is the first level of testing. A unit (or module) is a small component of the system, and unit testing is the process of testing each such component individually. Usually, this testing is done by the developers, who cover small parts of the code with unit tests.

Unit testing is performed on Dev or Test environments.
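
To make this concrete, here is a minimal sketch of a unit test written with Python's standard `unittest` module. The function under test, `apply_discount`, is a hypothetical example and does not come from any real project.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_applies_percentage(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_rejects_invalid_percentage(self):
        with self.assertRaises(ValueError):
            apply_discount(200.0, 150)
```

Each test checks one small behavior in isolation, so a failure points directly at the component that broke.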

Integration Testing

Integration testing, as well as Unit testing, is performed on Dev or Test environments. But unlike the Unit testing, in integration testing, we check how the individual modules will interact with each other.

The main approaches to integration testing are:

- Top-down – first we check high-level modules and then head downward

- Bottom-up – first we check low-level modules and then head upward

- Big-bang – all modules are tested simultaneously. Often used for small projects

System Testing

The third level of testing is system testing. Usually, it is performed in a test environment, but sometimes it can be performed on a Stage. We have to ensure that the entire system meets both functional and non-functional requirements. System testing is related to the Black box software testing method.

There are two approaches to System testing:

- Based on requirements – testing is carried out in accordance with functional and non-functional requirements, where test cases are written for each requirement

- Based on use cases – testing is carried out in accordance with the use cases of the product, on the basis of which test cases are created.

Acceptance Testing

The last level of testing. The main goal is to check the system for compliance with business requirements and verify its readiness to be transferred to end-users. Usually, it is performed by customers on Stage or Prod environments.

The main types of acceptance testing are:

- Alpha testing – a type of acceptance testing that is performed internally (by the test team or sometimes by developers). At the Alpha testing stage, the product may have functional bugs, errors, and some inconsistencies with the requirements. The bugs found during Alpha testing are fixed, so the likelihood of new bugs becomes much lower, but the product may still have functional bugs.

- Beta testing – starts after Alpha testing. At this stage, the product is almost finished and is ready for users. Usually, this testing takes from about a week up to a month. Beta testing is performed by a selected group of people: the target audience or anyone outside of the dev team. If any errors are found, they should be fixed.

To summarize, testing should begin with the smallest parts (modules or components) of the system, then the relationship between the modules, then the entire system, and at the end, the customer conducts acceptance testing.

Software Testing Process within a Sprint

Customers often ask us this question: “What kind of manual testing process do you have?”. Over the years, we have built and refined a certain structure that helps us ensure that our testing teams do their best in making sure the product is of high quality.

Here’s what the process of manual testing looks like within a sprint.

Requirements analysis and planning

This part is one of the most important ones. Usually, planning should be done a couple of days before the start of a new sprint. At this meeting, the team should determine the goal of the sprint and the scope of work (which can also be called the sprint backlog), and estimate the tasks that need to be accomplished. The Definition of Ready and the acceptance criteria should also be agreed on. Also, don’t forget about compatibility testing: each project has its own set of devices, operating systems, and browsers on which the system needs to be checked. The better we understand the requirements and the planned scope of work, the better chance we have of achieving a great final result.

After the requirements and the plan are approved, we proceed with the test design activities: writing test cases and checklists.

Test case creation

As soon as the sprint starts, the Dev team starts working on the implementation of the new functionality and the Testing team starts creating test documentation.

Test documentation should be kept in one place and should be available to all team members.

Setting up a test management tool will help to better navigate all your testing docs.

Most often we use Zephyr, Xray, or TestRail, which is also a very popular system.

With these systems, you can write and store documentation in one place and create test runs for each environment. Each time you execute a test run, for example for regression or smoke testing, the system records its duration. Over time, it can tell you how long each test takes to run on average and when the release date is most likely to be.
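
The arithmetic behind such a forecast is simple. The sketch below is only a simplified picture of the idea and is not how any particular test management tool computes it; the function names and the sample durations are hypothetical.

```python
from statistics import mean


def average_run_minutes(past_durations_minutes):
    """Average duration of past executions of the same test run."""
    return mean(past_durations_minutes)


def estimate_remaining_minutes(past_durations_minutes, runs_left):
    """Rough forecast: average duration times the number of runs still planned."""
    return average_run_minutes(past_durations_minutes) * runs_left
```

If three past regression runs took 90, 110, and 100 minutes, the average is 100 minutes, so three more planned runs would need roughly 300 minutes of testing time.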

Test Case Execution and Defect Logging

Gradually, the dev team hands over completed tasks for testing. In turn, the QA team begins to perform functional testing using the previously prepared test documentation. Test activities should be performed in a separate environment: the test environment.

While executing test cases or checklists, we mark each one as Pass or Fail and create a bug report for each failure.

A good bug report should consist of:

- ID/Bug number

- Bug Title/Summary

- Description

- Steps to reproduce

- Actual result

- Expected result

- Screenshot or Screen recording

- Platform/Environment

- Severity and Priority

- Assignee
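
The fields listed above map naturally onto a simple record. The following is a sketch under stated assumptions: the `BugReport` class, its field names, and the `missing_fields` helper are hypothetical and do not correspond to the schema of any particular bug-tracking system.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class BugReport:
    bug_id: str
    title: str
    description: str
    steps_to_reproduce: List[str]
    actual_result: str
    expected_result: str
    environment: str
    severity: str
    priority: str
    assignee: str
    attachments: List[str] = field(default_factory=list)  # screenshots or recordings

    def missing_fields(self) -> List[str]:
        """Names of fields that were left empty, in declaration order."""
        return [name for name, value in vars(self).items() if not value]


# Illustrative values only.
report = BugReport(
    bug_id="BUG-1",
    title="Login button overlaps footer in Safari",
    description="Layout issue on the login page",
    steps_to_reproduce=["Open the login page", "Scroll to the bottom"],
    actual_result="The button overlaps the footer",
    expected_result="The button sits above the footer",
    environment="Safari, Test environment",
    severity="Minor",
    priority="Low",
    assignee="",
)
```

Here `report.missing_fields()` returns `["assignee", "attachments"]`, a quick reminder that the report still needs an owner and a screenshot before it is complete.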

Retesting and Regression

Each fixed bug should be retested. Toward the end of the sprint, it is important to set the date for the code freeze. Once the code freeze begins, no further code changes are allowed, so the QA team can perform regression testing in isolation. The Test team must verify that the application still works as expected after code changes, updates, or enhancements.

Regression testing is usually performed in a Test environment, but it also could be performed in a Stage environment. Also, at this phase, the Component, Integration, and System testing should be completed.

Release and Test Report Sharing

The release is the final stage of a sprint that reflects the efforts of all team members. At this stage, code from the staging environment is deployed to the production environment, where it will be used by end-users and customers. That is why it is very important to conduct the release carefully, without any risk of damaging or losing existing production data, and to make sure there are no blockers in the workflows. To avoid such risks, smoke testing of the production environment should be performed right after the production update to make sure that all critical functionality works as expected.

Based on our experience, it’s also important to use other best practices during the release:

- Try to avoid last-minute changes to release scope when regression or smoke testing is in progress. It is very important to consider a “code-freeze” approach with a development team and stakeholders.

- Do not conduct releases on Fridays, since issues that affect some users may still appear in the production environment, and the development team needs to be able to address them as soon as possible.

- When scheduling the release date, consider the QA team’s feedback on the quality of the planned release scope, collected after regression testing is done. If regression testing is not yet finished, it would be risky to conduct the release.

- Do not conduct releases while the application is in use by the end-users. The deployment process could be very time-consuming, which could cause some downtime in application availability. For real-time applications, this could be critical. That is why it’s important to know the time frames with the lowest user activity in the application and to conduct releases within them.

- All team members should be available and focused during the release to be able to quickly fix any issues that may happen. At this stage, good and effective communication between QA Engineers, developers, and stakeholders is very important.
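
The practices above can also be read as a pre-release checklist. The sketch below encodes them as simple checks; the function name, its parameters, and the wording of the messages are all hypothetical, and the low-activity hours would have to come from your own usage data.

```python
from datetime import datetime


def release_blockers(planned_at, regression_finished, scope_frozen,
                     low_activity_hours, team_available):
    """Return the reasons a planned release conflicts with the practices above."""
    blockers = []
    if not scope_frozen:
        blockers.append("release scope is still changing")
    if planned_at.weekday() == 4:  # Monday is 0, so 4 is Friday
        blockers.append("release is planned for a Friday")
    if not regression_finished:
        blockers.append("regression testing is not finished")
    if planned_at.hour not in low_activity_hours:
        blockers.append("release is outside the low-activity window")
    if not team_available:
        blockers.append("not all team members are available")
    return blockers
```

An empty list means none of the listed practices is violated; it does not replace the team's own judgment about whether the release is ready.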

It would be useful to provide a test report after the smoke testing. Increasingly, customers are asking for such reports. Test report templates differ depending on the project and customer requirements, but the important thing is to describe the condition of the product in terms of quality: which features were implemented, what was tested, and what was successfully released. Do we have any blockers? Did we plan to release some functionality but, for some reason, move its implementation to future sprints? All of this should be indicated in the report.

Support and Maintenance

After the release, the end-users start using the application and it’s possible that some issues could be identified by them. This could happen because of a variety of different environments (e.g. browsers versions, mobile devices, etc.) that could be used by real users and sometimes because of different approaches to using the application.

At this stage, it’s very important to track the issues/improvements from the end-users and customers to keep improving the application from a functional and usability point of view.

Usually, for each issue/improvement, the testing or support team creates new tickets in the bug-tracking system. Right after that, a QA team tries to reproduce the issue mentioned in the ticket and defines its severity, so that the development team and the customer can keep it in mind when planning future releases or hotfixes if there are critical or blocking issues.
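
A minimal sketch of this triage step is shown below. The severity labels, the function name, and the handling of issues that cannot be reproduced are assumptions made for illustration; teams usually define their own severity scale.

```python
def plan_fix(severity: str, reproduced: bool) -> str:
    """Suggest where a reported issue should be scheduled."""
    if not reproduced:
        # Assumption: an issue that cannot be reproduced needs more input first.
        return "ask the reporter for more details"
    if severity in ("critical", "blocker"):
        return "hotfix"
    return "future release"
```

Critical and blocking issues go to a hotfix, while everything else is planned into a future release together with the customer.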

Software Testing Documentation

Software testing documentation is a very important part of the quality assurance process on the project. Its main goal is to make software testing effective, trackable, and predictable for the development team and the stakeholders.

Let’s take a look at the most typical test documentation types.

- Test plan. A file that describes the test strategy, resources, test environment, limitations, and schedule of the testing process. It’s the fullest testing document, essential for informed planning. Such a document is distributed between testing team members and shared with all stakeholders. Test plans are usually created by test managers or senior software testing engineers at the early stages of the project initiation.

- Test strategy. This document reflects general approaches to system testing. As the project moves along, developers, designers, and product owners can come back to the document and see if the actual performance corresponds to the planned activities. Usually, this document is created as a part of the test plan. It contains information on the types of software testing to be used (for both functional and non-functional testing). Also, it specifies whether manual testing or automation testing will be conducted. If automated, then it will be indicated which automated tools will be used.

- Test case. A file that contains a set of actions (steps) to verify a specific functionality of a software application, along with the expected test results. Depending on the complexity of the functionality, test cases can also include preconditions, test data, image/video attachments, etc. This level of detail makes test cases a good basis for test automation. Each test case should test only ONE specific part of the functionality.

- Checklist. A file that contains a list of checks without specific steps, but with expected test results and statuses (Passed/Failed). This is a high-level document that is usually created in short-term projects when the test team does not have enough time to create test cases.

- Traceability matrix. A file that maps functional requirements and test cases. All entities in the traceability matrix should have unique IDs. This document provides visibility for the development team and stakeholders of how many requirements are covered by test cases and how many remain.
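
To illustrate the last item, the sketch below computes requirement coverage from a traceability matrix. The IDs and the function name are hypothetical; in practice the mapping usually lives in a spreadsheet or a test management system.

```python
def coverage_summary(requirement_ids, test_cases):
    """Summarize coverage; test_cases maps a test case ID to the requirement IDs it verifies."""
    covered = set()
    for linked_requirements in test_cases.values():
        covered.update(linked_requirements)
    uncovered = [req for req in requirement_ids if req not in covered]
    return {
        "covered": len(requirement_ids) - len(uncovered),
        "uncovered": uncovered,
    }


# Illustrative IDs only.
requirements = ["REQ-1", "REQ-2", "REQ-3"]
test_cases = {
    "TC-1": ["REQ-1"],
    "TC-2": ["REQ-1", "REQ-2"],
}
summary = coverage_summary(requirements, test_cases)
```

In this example two of the three requirements are covered, and `summary["uncovered"]` lists `REQ-3` as the one that still needs a test case.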

In the early stages of the project initiation, it would be very important to choose the most appropriate software testing documentation as part of the software quality assurance process.

There are a few factors that could influence the choice of test documentation types:

- Project duration

- Number of available software testers

- Planned automation testing

- Project domain

- Corporate standards, etc.

For short-term projects (from 1 to 6 months): I would recommend using checklists and a test plan (if needed) because the creation and support of test documentation requires a lot of the testing team’s time. From my experience, short-term projects require a lot of flexibility and usually don’t have many free QA resources to build and support a wide test documentation strategy.

For long-term projects (6+ months): It’s better to choose the Test Plan + Test Cases + Traceability Matrix documentation strategy, because long-term projects usually see changes in application functionality during development and support, and these changes must be reflected in the test documentation. Another reason for choosing this strategy is the possibility of automation testing, which requires detailed test cases written by the manual testing team.

For healthcare-related projects, which could affect human lives, the Test Plan + Test Cases + Traceability Matrix strategy is required because they need a clear and formal testing process based on the well-defined test steps.
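
These recommendations can be summarized as a small decision rule. The sketch below is one possible reading of them: the function name and parameters are hypothetical, and treating a project of exactly six months as short-term is an assumption, since the two ranges above meet at that point.

```python
def recommended_documents(duration_months: int, healthcare: bool = False):
    """Suggest a test documentation set based on the recommendations above."""
    full_set = ["Test plan", "Test cases", "Traceability matrix"]
    if healthcare:
        return full_set  # a formal process is required regardless of duration
    if duration_months > 6:  # assumption: exactly 6 months counts as short-term
        return full_set
    return ["Checklists", "Test plan (if needed)"]
```

For example, `recommended_documents(3)` suggests checklists with an optional test plan, while `recommended_documents(3, healthcare=True)` returns the full set.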

Where to Store Your Test Documentation?

Nowadays there are multiple ways to store various test documentation types. The most commonly used ways are Test management systems (TMS) and document editor systems (Google Docs, Microsoft Office, OneNote, etc).

For most types of test documentation, document editors are used as these doctypes do not require complex structures.

Test plan and test strategy usually have the format of a structured text and the main features that are needed for creating such documents are:

- Create, edit, and view simple word files

- Share documentation with others

- Keep track of update history (documentation versioning)

For traceability matrices, however, these features are not enough, as this type of documentation is usually created in spreadsheet format. Thus, for this doctype, Excel or Google Sheets is used, as they allow:

- Creating large spreadsheets

- Filtering and sorting values in columns

- Hyperlinking

These document systems can also be used for creating and storing test cases and checklists, but such an approach has limitations:

- Impossible to set up automatic report generation

- No possibility of creating test runs

- No integration with bug tracking systems

- Hard to support large and long-term projects.

Modern test management systems do not have such limitations, as they were initially created to fulfill all needs of the QA Engineer’s routine:

- Reporting

- Integration with bug tracking systems

- Integration with automation software testing tools

- Creation and management of test cases and checklists

- Test run creation

- Versioning

- Collaboration in the testing team on test execution, etc.

There is a wide variety of test management systems (e.g. Xray, TestRail, TestLink, and others). When choosing the tool, these aspects should be considered: budget, features, ability to integrate with other systems, project duration, and the number of expected system users.

To tie it all together, a test management system is a way to build an efficient and predictable QA process and to provide advanced visibility and transparency of test outputs for stakeholders. On the other hand, a document editor is a good solution that can cover basic needs for short-term projects or projects with restricted budgets.

Conclusion

Summing it up, we would like to say, once again, that software testing is an integral part of software development, and that the quality and efficiency of testing directly depend on how the testing process is set up. A properly set up testing process helps ensure that the software you develop meets high standards and satisfies the requirements and needs of end-users.

Over the years, the QA team at Waverley has formulated the best practices for setting up testing processes that we use as mandatory on every project. It also allows us to successfully provide Software testing services to our customers.

Therefore, if you would like to set up the testing process correctly in your company or on a project, follow the tips above. But mind that this is only a short guide about the most important factors. That being said, don’t forget about such things as:

- Establishing Communication with the team and with the client

- Proper setup of test management tools for your bug-tracking system

- Reporting

- Conducting peer review in the test team

- Soft skills improvement

We also prepared a short Self-Check Quiz that will help you determine whether your testing process is on track. If you have any questions or need more detailed guidance, please reach out to us using the contact us form and we’ll be more than happy to organize a custom, on-demand consultation for you and your team. Good luck!

About the Authors