Loosely-Coupled System Design

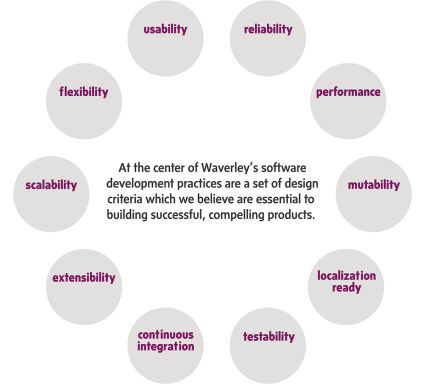

Halfway through Waverley’s corporate presentation is a slide listing ten design criteria that guide our developers in architecting and building products for our clients. These criteria include usability, flexibility, scalability, extensibility, testability, and others. A companion Waverley whitepaper, titled Agile UX Design, addresses the first of these, usability. This whitepaper discusses several of the others and explains some techniques that let us put them into practice in our work.

Building flexible, scalable, high-performing, extensible, testable systems

At Waverley these design criteria are sacrosanct. We believe every senior software engineer should embrace them when building a product expected to have a long lifecycle. But this list of values is often viewed as a set of tradeoffs: “scalable vs. maintainable vs. testable: choose one.” The question then becomes: is it possible to satisfy them simultaneously and without compromise? The short answer is yes.

First let’s clarify the meaning of six of the most important criteria:

- Flexibility. Refers to the ability to change functionality without having to change all dependencies.

- Scalability. Refers to the ability of a system to handle proportionally more load as more resources are added.

- Performance. Refers to the ability to support information exchange with minimal overhead. Performance considerations typically include latency, jitter, throughput, and processor loading.

- Extensibility. Refers to the ability to extend a system with new functionality with minimum effort.

- Maintainability. Is an umbrella term covering multiple criteria that appear in the “Waverley wheel” on page 2. Maintainability means that a system’s design and deployment scenarios allow maintenance activities to be performed with minimal effort. Typical maintenance activities include error correction, enhancement of capabilities, removal of obsolete capabilities, and optimization.

- Testability. Is the degree to which a system supports testing in a given test context. A lower degree of testability results in increased testing effort.

Performance is case-by-case

Performance is a practical concern that depends heavily on platform, system design, infrastructure, and implementation details. The approaches required to improve performance differ widely across iOS, Android, Windows 8, hybrid, desktop, CSA, cloud-based systems, and so on. Making a system perform well requires a deep understanding of platform- and infrastructure-related issues.

Unlike the criteria (flexibility, scalability, extensibility, etc.) to which the rest of this paper is dedicated, performance has no “conceptual” or general solution other than “choose the right expert to do the job.” At Waverley those experts are in-house.

General principles to satisfy the remaining criteria

The age-old concept “divide et impera” (divide and conquer) provides a clue. The Romans were very sophisticated in applying this principle to build an empire lasting over a millennium. Back then, it meant breaking up existing power structures to prevent smaller groups from linking up. If we apply this idea to software design, it suggests breaking up internal and external dependencies in order to gain modularity and lower system complexity. We call this approach loosely-coupled system design.

Coupling refers to the degree of direct knowledge that one system component has of another. A loosely-coupled system is one in which each of its components has, or makes use of, little or no knowledge of the properties and characteristics of other separate components.

Building a loosely-coupled system

The problem is that classic object-oriented programming (OOP) and interface-based architectures are tightly-coupled by nature. Hard dependencies appear throughout: inheritance, direct API calls, constructor parameters, and helper classes that eventually grow into “god objects.” If we let those dependencies grow, the system soon turns into an unmaintainable tangle of code.
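The following minimal Python sketch makes the problem concrete by showing the kind of hard dependency described above. The class names (`OrderService`, `SqlOrderRepository`, `SmtpMailer`) are hypothetical. The “database” and “mailer” are in-memory stand-ins so that the example runs on its own.

```python
# A deliberately tightly-coupled design: OrderService constructs its own
# concrete collaborators, so it "knows" exactly which classes it depends on.

class SqlOrderRepository:
    """Hypothetical stand-in for a database-backed repository."""

    def __init__(self) -> None:
        self.rows = []

    def save(self, order_id: str, total: float) -> None:
        self.rows.append((order_id, total))


class SmtpMailer:
    """Hypothetical stand-in for an e-mail client."""

    def __init__(self) -> None:
        self.outbox = []

    def send(self, to: str, body: str) -> None:
        self.outbox.append((to, body))


class OrderService:
    def __init__(self) -> None:
        # Hard dependencies: the concrete classes are fixed here and cannot
        # be swapped for test doubles or alternatives without editing this class.
        self.repository = SqlOrderRepository()
        self.mailer = SmtpMailer()

    def place_order(self, order_id: str, total: float, customer: str) -> None:
        self.repository.save(order_id, total)
        self.mailer.send(customer, f"Order {order_id} received")


service = OrderService()
service.place_order("A-1", 99.5, "alice@example.com")
```

Because `OrderService` builds its collaborators itself, you have to edit `OrderService` to replace the mailer in a unit test or to switch databases.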

Two key things can and should be done to avoid tight coupling:

1) Keep dependencies to a minimum

2) Separate concerns (see: en.wikipedia.org/wiki/Separation_of_concerns)

Several techniques make it possible to decouple OOP architectures, which are naturally tightly-coupled. The most powerful is inversion of control (en.wikipedia.org/wiki/Inversion_of_control), for which implementations exist on all major platforms (Castle Windsor, Unity, Autofac, Spring, Spring.NET, Ninject, etc.). These containers are relatively easy to master. They dramatically reduce the number of hard dependencies between components and make the system more testable.
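The sketch below is a rough illustration of what an IoC container does: constructor injection plus a toy, hand-rolled container. It is not the API of Castle Windsor, Spring, Autofac or any other library listed above. Those containers typically wire constructor parameters automatically, whereas this hypothetical `Container` relies on explicit factory functions. All class names are hypothetical.

```python
from typing import Any, Callable, Dict, Protocol


# Abstractions that OrderService depends on.
class OrderRepository(Protocol):
    def save(self, order_id: str, total: float) -> None: ...


class Mailer(Protocol):
    def send(self, to: str, body: str) -> None: ...


class OrderService:
    # Constructor injection: collaborators are handed in from outside,
    # so OrderService never names a concrete class.
    def __init__(self, repository: OrderRepository, mailer: Mailer) -> None:
        self.repository = repository
        self.mailer = mailer

    def place_order(self, order_id: str, total: float, customer: str) -> None:
        self.repository.save(order_id, total)
        self.mailer.send(customer, f"Order {order_id} received")


class Container:
    """Toy IoC container (hypothetical): maps each abstraction to a factory."""

    def __init__(self) -> None:
        self._factories: Dict[type, Callable[["Container"], Any]] = {}

    def register(self, abstraction: type, factory: Callable[["Container"], Any]) -> None:
        self._factories[abstraction] = factory

    def resolve(self, abstraction: type) -> Any:
        # Each call builds a fresh instance (a "transient" registration).
        return self._factories[abstraction](self)


# In-memory implementations, e.g. for unit tests.
class InMemoryRepository:
    def __init__(self) -> None:
        self.rows = []

    def save(self, order_id: str, total: float) -> None:
        self.rows.append((order_id, total))


class FakeMailer:
    def __init__(self) -> None:
        self.outbox = []

    def send(self, to: str, body: str) -> None:
        self.outbox.append((to, body))


# Composition root: the only place that knows which concrete classes are used.
container = Container()
container.register(OrderRepository, lambda c: InMemoryRepository())
container.register(Mailer, lambda c: FakeMailer())
container.register(
    OrderService,
    lambda c: OrderService(c.resolve(OrderRepository), c.resolve(Mailer)),
)

service = container.resolve(OrderService)
service.place_order("A-1", 99.5, "alice@example.com")
```

Swapping `InMemoryRepository` for a database-backed class is a one-line change in the registration code. `OrderService` itself stays untouched, which is what makes it easy to test in isolation.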

Composition over inheritance (en.wikipedia.org/wiki/Composition_over_inheritance) is also helpful to make a system more flexible and reusable, and is often used in business logic modelling.
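Here is a minimal sketch of composition over inheritance, assuming a hypothetical invoicing domain. Instead of a class hierarchy such as `DiscountedInvoice` or `DiscountedShippedInvoice`, an `Invoice` holds a list of independent pricing policies and applies them in order. The policies here are plain Python functions. In a class-based language they would more likely be small strategy objects.

```python
from dataclasses import dataclass
from typing import Callable, List

# A pricing policy takes an amount and returns an adjusted amount.
PricingPolicy = Callable[[float], float]


def percent_off(percent: float) -> PricingPolicy:
    def apply(amount: float) -> float:
        return amount * (1 - percent / 100)
    return apply


def flat_fee(fee: float) -> PricingPolicy:
    def apply(amount: float) -> float:
        return amount + fee
    return apply


@dataclass
class Invoice:
    # The invoice *has* policies rather than *being* a specialized subclass,
    # so any combination of behaviors is possible without new classes.
    subtotal: float
    policies: List[PricingPolicy]

    def total(self) -> float:
        amount = self.subtotal
        for policy in self.policies:
            amount = policy(amount)
        return round(amount, 2)


invoice = Invoice(subtotal=200.0, policies=[percent_off(10), flat_fee(5.0)])
print(invoice.total())  # 185.0
```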

In addition, several decoupling design patterns resolve different types of dependencies. Among them are the abstract factory, factory method, bridge, and observer patterns (Wikipedia has an article on each).
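The abstract factory pattern, for example, lets client code create a whole family of related objects without naming any concrete class. The Python sketch below is illustrative only. The factory and product names (`InfrastructureFactory`, `LocalFactory`, `CloudFactory`, and so on) are hypothetical, and the products return strings instead of talking to real infrastructure.

```python
from abc import ABC, abstractmethod
from typing import List


# Abstract products: all that client code is allowed to know about.
class Connection(ABC):
    @abstractmethod
    def query(self, sql: str) -> str: ...


class Cache(ABC):
    @abstractmethod
    def get(self, key: str) -> str: ...


# Abstract factory: creates a consistent family of related products.
class InfrastructureFactory(ABC):
    @abstractmethod
    def create_connection(self) -> Connection: ...

    @abstractmethod
    def create_cache(self) -> Cache: ...


# Concrete family 1 (hypothetical).
class LocalConnection(Connection):
    def query(self, sql: str) -> str:
        return f"local:{sql}"


class LocalCache(Cache):
    def get(self, key: str) -> str:
        return f"local-cache:{key}"


class LocalFactory(InfrastructureFactory):
    def create_connection(self) -> Connection:
        return LocalConnection()

    def create_cache(self) -> Cache:
        return LocalCache()


# Concrete family 2 (hypothetical).
class CloudConnection(Connection):
    def query(self, sql: str) -> str:
        return f"cloud:{sql}"


class CloudCache(Cache):
    def get(self, key: str) -> str:
        return f"cloud-cache:{key}"


class CloudFactory(InfrastructureFactory):
    def create_connection(self) -> Connection:
        return CloudConnection()

    def create_cache(self) -> Cache:
        return CloudCache()


def build_report(factory: InfrastructureFactory) -> List[str]:
    # Client code depends only on the abstract factory and product types;
    # switching families means passing a different factory, nothing more.
    connection = factory.create_connection()
    cache = factory.create_cache()
    return [connection.query("SELECT 1"), cache.get("report")]


print(build_report(LocalFactory()))
```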

As for flexibility, reducing the number of dependencies inherently makes a system more flexible, so all of the techniques mentioned above contribute to it.

Shifting the paradigm

Raising the bar further, we can ask whether there is an engineering paradigm that is loosely-coupled by nature, just as OOP is tightly-coupled by nature. Again, the answer is yes: “Data-Oriented Programming” (DOP), as described by Eugene Kuznetsov of DataPower Technology (now part of IBM).

DOP is based on the notion that data (as a category) is the only invariant in a loosely-coupled system. This invariance, seen as a measure of reliability, suggests that data should be the sole intermediary (connecting link) between software components. In other words, data is primary, while operations on the data are secondary. Since components cannot be assumed to share a common “logical” address space, they exchange information by passing messages.
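The sketch below illustrates message passing without a shared address space: a value is serialized to bytes, sent over a channel and rebuilt on the other side. A `queue.Queue` of bytes stands in for a real transport such as a socket or message broker. The JSON envelope with a `type` field is an assumed format, not part of DOP as described by Kuznetsov.

```python
import json
import queue
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class TemperatureReading:
    sensor_id: str
    celsius: float


# The channel carries bytes only, standing in for a transport between
# components that share no memory or object references.
channel: "queue.Queue[bytes]" = queue.Queue()


def producer_send(reading: TemperatureReading) -> None:
    # Assumed envelope format: {"type": ..., "data": {...}}.
    payload = {"type": "TemperatureReading", "data": asdict(reading)}
    channel.put(json.dumps(payload).encode("utf-8"))


def consumer_receive() -> TemperatureReading:
    envelope = json.loads(channel.get().decode("utf-8"))
    if envelope["type"] != "TemperatureReading":
        raise ValueError(f"unexpected message type: {envelope['type']}")
    # The consumer rebuilds the value from data alone; it never sees the
    # producer's object.
    return TemperatureReading(**envelope["data"])


original = TemperatureReading("s1", 21.5)
producer_send(original)
received = consumer_receive()
print(received == original, received is original)  # True False
```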

DOP is complementary to OOP and provides a solid foundation for constructing loosely-coupled systems. DOP can be described in terms of the publish-subscribe design pattern (en.wikipedia.org/wiki/Publish-subscribe_pattern). In this model, a system component subscribes to the data type or types it can consume as input, does its work, and publishes its results back to a Data Bus. Other components may in turn consume those results from the bus as their own input. Again, the main idea is data as an interface: system components need not, and should not, know anything about each other. They only declare the types they consume and produce. Message routing is left entirely to the Data Bus.
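The publish-subscribe flow above can be sketched in a few lines of Python. This is a minimal, single-process illustration built on three assumptions:

- The bus routes purely by the message’s Python type.
- Delivery is synchronous.
- The data types (`RawReading`, `Alert`) and the two components are hypothetical.

The components never refer to each other; they share only the data types.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Any, Callable, DefaultDict, List, Optional


# Data types are the only contract between components.
@dataclass(frozen=True)
class RawReading:
    sensor_id: str
    celsius: float


@dataclass(frozen=True)
class Alert:
    sensor_id: str
    message: str


Handler = Callable[[Any], Optional[Any]]


class DataBus:
    """Minimal in-process bus that routes each message by its type."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[type, List[Handler]] = defaultdict(list)

    def subscribe(self, data_type: type, handler: Handler) -> None:
        self._subscribers[data_type].append(handler)

    def publish(self, message: Any) -> None:
        for handler in list(self._subscribers[type(message)]):
            result = handler(message)
            # A component's output goes back onto the bus; the component
            # does not know who, if anyone, consumes it.
            if result is not None:
                self.publish(result)


# Component 1: consumes RawReading, may produce Alert.
def threshold_checker(reading: RawReading) -> Optional[Alert]:
    if reading.celsius > 90.0:
        return Alert(reading.sensor_id, f"overheating: {reading.celsius} C")
    return None


# Component 2: consumes Alert, produces nothing.
alert_log: List[str] = []


def alert_logger(alert: Alert) -> None:
    alert_log.append(f"{alert.sensor_id}: {alert.message}")


bus = DataBus()
bus.subscribe(RawReading, threshold_checker)
bus.subscribe(Alert, alert_logger)

bus.publish(RawReading("s1", 72.0))
bus.publish(RawReading("s2", 95.5))
print(alert_log)  # ['s2: overheating: 95.5 C']
```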

The benefits of such a model are enormous:

- Extensibility: it’s easy to add a new subscriber that provides new functionality without modifying existing components.

- Scalability: the system can be scaled horizontally, for example by running several instances of a component that consume the same data type.

- Maintainability: since components are completely decoupled, it is easier to localize errors and improve individual components.
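Consumer groups, sketched below, are one way to realize the extensibility and scalability benefits above. This is an assumed design for illustration, not a description of DDS or any particular product. Every subscriber group receives each message, and within a group, messages are spread round-robin across interchangeable workers. The `Order` type and worker names are hypothetical. In a real deployment the workers would be separate processes or machines.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Any, Callable, DefaultDict, Dict, List, Tuple

Handler = Callable[[Any], None]


class GroupedBus:
    """Hypothetical bus with consumer groups (illustrative, not DDS).

    Each group subscribed to a data type receives every message of that type
    once; within a group, messages are distributed round-robin to workers.
    """

    def __init__(self) -> None:
        self._groups: DefaultDict[type, Dict[str, List[Handler]]] = defaultdict(dict)
        self._next: DefaultDict[Tuple[type, str], int] = defaultdict(int)

    def subscribe(self, data_type: type, group: str, handler: Handler) -> None:
        self._groups[data_type].setdefault(group, []).append(handler)

    def publish(self, message: Any) -> None:
        data_type = type(message)
        for group, workers in self._groups.get(data_type, {}).items():
            key = (data_type, group)
            worker = workers[self._next[key] % len(workers)]
            self._next[key] += 1
            worker(message)


@dataclass(frozen=True)
class Order:
    order_id: int


handled: List[str] = []


def make_worker(name: str) -> Handler:
    def work(order: Order) -> None:
        handled.append(f"{name}:{order.order_id}")
    return work


bus = GroupedBus()
# Horizontal scaling: two interchangeable workers share the "billing" load.
bus.subscribe(Order, "billing", make_worker("billing-1"))
bus.subscribe(Order, "billing", make_worker("billing-2"))
# Extensibility: a new feature is simply a new group; nothing else changes.
bus.subscribe(Order, "analytics", make_worker("analytics-1"))

for order_id in range(1, 5):
    bus.publish(Order(order_id))

print(handled)
```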

As a bonus, we get incremental and independent development. Application development is typically performed by a compound team (a team of teams) of developers who are geographically separated and often spread across time zones. The productivity of such a compound team depends directly on each developer’s ability to work largely independently, without blocking or compromising anyone else’s work. DOP also allows each application subsystem to be under an independent domain of control, from both an operational and a management perspective. Using a data-oriented approach, we can split a system into pieces and implement them in parallel on different continents, with only a data structure in common.

The observer pattern mentioned above is compatible with the data-oriented approach: components “watch” a data model and “react” to its changes. This makes the pattern well suited to inter-component communication.
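The following minimal observer sketch assumes a hypothetical shopping-cart model. `ObservableModel` notifies every registered listener when a field actually changes. The two listeners react independently and know nothing about each other.

```python
from typing import Any, Callable, Dict, List, Tuple

# A listener receives (field name, old value, new value).
Listener = Callable[[str, Any, Any], None]


class ObservableModel:
    """Data model that notifies registered observers when a field changes."""

    def __init__(self, **fields: Any) -> None:
        self._fields: Dict[str, Any] = dict(fields)
        self._listeners: List[Listener] = []

    def watch(self, listener: Listener) -> None:
        self._listeners.append(listener)

    def get(self, name: str) -> Any:
        return self._fields[name]

    def set(self, name: str, value: Any) -> None:
        old = self._fields.get(name)
        if name in self._fields and old == value:
            return  # unchanged value: nobody is notified
        self._fields[name] = value
        for listener in list(self._listeners):
            listener(name, old, value)


badge_text: List[str] = []
audit: List[Tuple[str, Any, Any]] = []


def update_badge(name: str, old: Any, new: Any) -> None:
    # UI component: only cares about the item count.
    if name == "items":
        badge_text.append(f"{new} item(s)")


def record_change(name: str, old: Any, new: Any) -> None:
    # Audit component: records every change and knows nothing about the UI.
    audit.append((name, old, new))


cart = ObservableModel(items=0, total=0.0)
cart.watch(update_badge)
cart.watch(record_change)

cart.set("items", 2)
cart.set("total", 19.98)
cart.set("items", 2)  # same value again: no notification
```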

When building distributed web applications, it makes sense to use REST for communicating with the server side. This architectural style treats data (resources) as the “first-class citizen” and therefore supports the data-oriented approach.

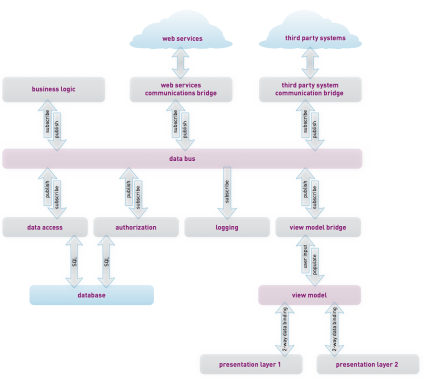

A generic data-oriented, loosely-coupled system design can be represented visually as shown in the diagram.

The “Data Bus” is the key element of this architecture and is responsible for message (data) routing. It stores all subscriptions and watches for publishers. When a message arrives, all subscribers to that particular data type are notified. The Data Bus can be implemented in many different ways. The right choice depends heavily on the chosen technologies, the system requirements, and the system size.
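The sketch below shows one possible in-process variant of a Data Bus. It is written on the assumption that publishers should never wait for subscribers. Publishers only enqueue messages, and a single dispatcher thread looks up the stored subscriptions by message type and notifies each subscriber. It is a teaching sketch, not a substitute for production middleware such as DDS, and the `PriceUpdate` type is hypothetical.

```python
import queue
import threading
import traceback
from collections import defaultdict
from dataclasses import dataclass
from typing import Any, Callable, DefaultDict, List

Handler = Callable[[Any], None]


class QueuedDataBus:
    """In-process Data Bus sketch with asynchronous, type-based routing."""

    def __init__(self) -> None:
        # Subscription table: data type -> subscribers for that type.
        self._subscriptions: DefaultDict[type, List[Handler]] = defaultdict(list)
        self._lock = threading.Lock()
        self._queue: "queue.Queue[Any]" = queue.Queue()
        # Daemon thread so the sketch does not keep the interpreter alive.
        self._dispatcher = threading.Thread(target=self._dispatch_forever, daemon=True)
        self._dispatcher.start()

    def subscribe(self, data_type: type, handler: Handler) -> None:
        with self._lock:
            self._subscriptions[data_type].append(handler)

    def publish(self, message: Any) -> None:
        # Publishers never call subscribers directly; they only enqueue.
        self._queue.put(message)

    def drain(self) -> None:
        """Block until every message published so far has been dispatched."""
        self._queue.join()

    def _dispatch_forever(self) -> None:
        while True:
            message = self._queue.get()
            with self._lock:
                handlers = list(self._subscriptions.get(type(message), []))
            for handler in handlers:
                try:
                    handler(message)
                except Exception:
                    # One failing subscriber must not stop delivery to others.
                    traceback.print_exc()
            self._queue.task_done()


@dataclass(frozen=True)
class PriceUpdate:
    symbol: str
    price: float


received: List[str] = []
bus = QueuedDataBus()
bus.subscribe(PriceUpdate, lambda m: received.append(f"chart {m.symbol}"))
bus.subscribe(PriceUpdate, lambda m: received.append(f"risk {m.symbol}"))

bus.publish(PriceUpdate("ACME", 10.5))
bus.publish("a str message: no subscribers, silently dropped")
bus.drain()
print(received)  # ['chart ACME', 'risk ACME']
```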

At the time of writing (late 2013), one of the most sophisticated data bus technologies for real-time systems is DDS (Data Distribution Service), defined by the OMG DDS standard (omg.org).

According to RTI (https://www.rti.com/industries/aerospace-defense), DDS is a mandated standard for publish-subscribe messaging by the U.S. Department of Defense and its Information Technology Standards Registry. Programs that have adopted DDS include:

- U.S. Navy’s Open Architecture Computing Environment (OACE) and FORCEnet

- U.S. Army’s Future Combat Systems (FCS)

- Joint Air Force, Navy and DISA Net-Centric Enterprise Solutions for Interoperability (NESI)

Given that data-oriented, and even data-centric, system design has already been adopted in the most advanced areas of engineering, Waverley believes it is well worth considering for conventional systems.

About the Author