Common AI Implementation Mistakes: From Strategy Gaps to Sustainable Adoption

One of the main reasons companies struggle to convert AI investments into tangible commercial value is that the same implementation mistakes keep recurring. Even though AI has moved well beyond experimentation, many companies still encounter implementation issues that restrict long-term value, increase risk, and limit adoption.

Many of these mistakes stem from ambiguous objectives, weak data foundations, and unrealistic expectations. They tend to surface during AI transformation initiatives in which strategy, technology, and people fail to advance together.

This article examines the most frequent AI implementation errors businesses make today, highlighting key AI integration issues and the risks that often jeopardize success. More importantly, it describes how alignment, governance, collaboration, and sustainable AI policies can help prevent AI deployment errors.

Contents

- Lack of Clear Business Objectives

- Poor Data Quality and Data Management

- Inadequate Understanding of AI Capabilities and Limitations

- Choosing the Wrong AI Solution or Technology

- Security Risks and Model Vulnerabilities

- Lack of Skilled Talent and Cross-Functional Collaboration

- Poor Model Deployment and MLOps Practices

- Weak Change Management and User Adoption

- Scaling Too Fast or Too Slow

- Conclusion: How to Avoid These AI Implementation Pitfalls

1. Lack of Clear Business Objectives

An MIT study found that 95% of GenAI pilots fail to deliver measurable business impact, underscoring the importance of defining a problem before implementing AI. Companies frequently adopt AI because it is seen as a strategic necessity rather than because it addresses a specific need. In such cases, AI becomes a solution in search of a problem.

Success is difficult to measure when AI initiatives are not tied to specific goals, such as lowering operating expenses, increasing customer retention, or speeding up decision-making. Teams may build models that are technically excellent but have no bearing on actual business outcomes. When AI is treated as a trend rather than a business tool, efforts become scattered and executive confidence in AI investments erodes.

Successful companies start with strategy, not technology. They set quantifiable objectives, identify areas where AI can genuinely add value, and ensure alignment with their broader business strategy.

2. Poor Data Quality and Data Management

The effectiveness of an AI system depends on the data that powers it. A common misconception is that more data automatically means better AI performance. In practice, low-quality data, such as duplicate entries, inconsistent formats, missing labels, or biased sampling, frequently produces inaccurate or misleading results.

AI projects often underestimate the effort required for data preparation, ownership, and governance. Without explicit accountability for data sources and quality criteria, models deteriorate quickly and lose credibility with stakeholders.

Companies that succeed with AI invest heavily in data foundations, including data governance frameworks, standardized pipelines, and ongoing data quality monitoring. Although this work is rarely visible, it is essential for long-term success.
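As a minimal illustration of the ongoing data quality monitoring described above, the Python sketch below counts three of the issues mentioned earlier: duplicate entries, missing labels, and inconsistent date formats. The record fields (`id`, `signup_date`, `label`), the `data_quality_report` function, and the choice of `YYYY-MM-DD` as the canonical date format are hypothetical assumptions, not part of any particular tool.

```python
from collections import Counter
from datetime import datetime

# Hypothetical customer records; the field names are illustrative assumptions.
records = [
    {"id": 1, "signup_date": "2024-01-15", "label": "churned"},
    {"id": 2, "signup_date": "15/01/2024", "label": "retained"},
    {"id": 2, "signup_date": "15/01/2024", "label": "retained"},  # duplicate entry
    {"id": 3, "signup_date": "2024-02-01", "label": None},        # missing label
]


def is_iso_date(value):
    """Return True if value is a YYYY-MM-DD string (the assumed canonical format)."""
    try:
        datetime.strptime(value, "%Y-%m-%d")
        return True
    except (TypeError, ValueError):
        return False


def data_quality_report(rows, key="id", date_field="signup_date", label_field="label"):
    """Count duplicate keys, missing labels, and non-canonical date formats."""
    key_counts = Counter(row[key] for row in rows)
    return {
        "rows": len(rows),
        "duplicate_keys": sorted(k for k, n in key_counts.items() if n > 1),
        "missing_labels": sum(1 for row in rows if row.get(label_field) is None),
        "non_iso_dates": sum(1 for row in rows if not is_iso_date(row.get(date_field))),
    }


report = data_quality_report(records)
print(report)
# {'rows': 4, 'duplicate_keys': [2], 'missing_labels': 1, 'non_iso_dates': 2}
```

In a pipeline, a report like this could run on every new batch, with the batch blocked or flagged when counts exceed agreed thresholds; setting those thresholds is a governance decision rather than something the code can determine.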

3. Inadequate Understanding of AI Capabilities and Limitations

Unrealistic expectations, or what PMI calls “Overpromising Results”, present another significant obstacle. AI is often treated as a plug-and-play solution that can deliver immediate, nearly flawless results. This misconception leads to disappointment and the early abandonment of AI projects.

AI systems are probabilistic rather than deterministic. They need human supervision, monitoring, and iteration. It’s also easy to confuse automation with intelligence: not every automated process needs machine learning, and not every ML model produces useful intelligence. This distinction is particularly relevant in technical domains such as software development, where AI-assisted tools can boost productivity but still require secure workflows, human oversight, and clear governance boundaries, as explored in Waverley’s article on AI-assisted engineering in modern software development.

In production settings, where real-world data often differs from training data, overestimating a model’s accuracy or ability to generalize can be especially harmful. Organizations need to treat AI as an evolving capability rather than a one-time deployment.
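To make human supervision of probabilistic outputs more concrete, here is a Python sketch that routes low-confidence predictions to a human reviewer instead of acting on them automatically. The `Prediction` type, the `route_predictions` helper, and the 0.85 threshold are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # the model's score for `label`, assumed to lie in [0, 1]


def route_predictions(predictions, threshold=0.85):
    """Split predictions into an auto-accept list and a human-review queue.

    The 0.85 default is an illustrative assumption; in practice it should be
    tuned on held-out data, because raw model scores are often poorly calibrated.
    """
    auto_accepted, needs_review = [], []
    for p in predictions:
        if p.confidence >= threshold:
            auto_accepted.append(p)
        else:
            needs_review.append(p)
    return auto_accepted, needs_review


preds = [
    Prediction("a", "approve", 0.97),
    Prediction("b", "reject", 0.62),
    Prediction("c", "approve", 0.88),
]
auto_accepted, needs_review = route_predictions(preds)
print([p.item_id for p in auto_accepted])  # ['a', 'c']
print([p.item_id for p in needs_review])   # ['b']
```

Keeping the threshold as an explicit parameter makes it easy to revisit as real-world data moves away from the training data.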

4. Choosing the Wrong AI Solution or Technology

Technology decisions driven by hype rather than requirements often lead to overengineering. In some cases, simpler statistical or rule-based methods would be more effective, easier to understand, and more economical than complex deep learning models.

Furthermore, choosing proprietary systems without considering integration and flexibility can lead to vendor lock-in, which restricts scalability. Business requirements, data maturity, legal restrictions, and long-term maintenance concerns should all be taken into account when selecting AI technology, not just market trends.
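One practical way to test whether a complex model is justified is to measure a simple rule-based baseline first. The sketch below assumes a hypothetical urgent-ticket classification task, with made-up keywords and examples. It scores a keyword rule so that any candidate ML model can be compared against it on the same labelled data.

```python
# Hypothetical labelled support tickets: (text, is_urgent).
tickets = [
    ("Server down, customers cannot log in", True),
    ("Please update my billing address", False),
    ("Outage in EU region since 9am", True),
    ("How do I export a report?", False),
    ("Payment page returns error 500", True),
    ("Feature request: dark mode", False),
    ("Cannot find last month's invoice PDF", False),
]

# Hand-written rule: flag a ticket as urgent if it mentions any of these words.
URGENT_KEYWORDS = ("down", "outage", "error", "cannot")


def rule_based_is_urgent(text):
    lowered = text.lower()
    return any(word in lowered for word in URGENT_KEYWORDS)


def accuracy(predict, data):
    """Fraction of examples where the predictor matches the label."""
    correct = sum(predict(text) == label for text, label in data)
    return correct / len(data)


baseline_acc = accuracy(rule_based_is_urgent, tickets)
print(f"Rule-based baseline accuracy: {baseline_acc:.2f}")  # 0.86 (6 of 7 correct)
```

If a deep learning model cannot clearly beat a baseline like this on held-out data, its extra cost and opacity may not be worth it. The misclassified invoice ticket also shows where simple rules break down, which is useful evidence when deciding whether ML is needed at all.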

5. Security Risks and Model Vulnerabilities

Ethical, legal, and security risks are no longer secondary concerns in AI projects; they are now major implementation issues. Because bias, fairness, data privacy, transparency, and system security are closely interconnected, a shortcoming in any one of these areas can jeopardize long-term adoption, compliance, and trust.

AI systems trained on insufficient, biased, or poorly managed data may perpetuate existing disparities and produce unfair results. In addition, a lack of explainability makes AI-driven decisions hard to defend, especially in regulated sectors like insurance, finance, and healthcare. Companies that need to manage sensitive data responsibly can learn more about practical strategies for privacy-aware AI implementation in Waverley’s article on AI in data privacy.

From a security standpoint, AI creates additional attack surfaces. Poorly secured models and pipelines may allow unauthorized access, expose private information, or become targets of adversarial attacks and model theft. Inadequate monitoring, unprotected APIs, and weak access controls greatly increase these risks.
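As a small example of access control for a model-serving API, the following Python sketch checks an API key against stored hashes and then verifies that the caller's role permits the requested action. The key values, roles, the `export_model` action, and the `authorize` function are hypothetical; only `hashlib.sha256` and `hmac.compare_digest` come from the Python standard library.

```python
import hashlib
import hmac

# Hypothetical key store: only SHA-256 hashes of issued API keys are kept, each
# mapped to a role. Real deployments would typically rely on a secrets manager
# or identity provider rather than an in-code dictionary.
API_KEY_HASHES = {
    hashlib.sha256(b"demo-key-analyst").hexdigest(): "analyst",
    hashlib.sha256(b"demo-key-admin").hexdigest(): "admin",
}

# Hypothetical permissions for a model-serving endpoint. Restricting who can
# export model weights is one guard against model theft.
PERMISSIONS = {
    "analyst": {"predict"},
    "admin": {"predict", "export_model"},
}


def authorize(api_key: str, action: str) -> bool:
    """Return True only if the key is known and its role permits the action."""
    presented = hashlib.sha256(api_key.encode("utf-8")).hexdigest()
    role = None
    for stored_hash, stored_role in API_KEY_HASHES.items():
        # compare_digest performs a constant-time comparison, which reduces
        # the risk of timing attacks against the stored hashes.
        if hmac.compare_digest(presented, stored_hash):
            role = stored_role
    return role is not None and action in PERMISSIONS.get(role, set())


print(authorize("demo-key-analyst", "predict"))       # True
print(authorize("demo-key-analyst", "export_model"))  # False
print(authorize("unknown-key", "predict"))            # False
```

Denying by default (an unknown key or an unlisted action returns `False`) keeps a misconfiguration from silently opening the endpoint.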

Responsible AI implementation requires integrated governance frameworks that address ethics, compliance, and security together. Bias evaluation, privacy protections, explainability methods, and security controls must be built in throughout the AI lifecycle rather than added as reactive measures after deployment.
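Bias evaluation can start with simple, auditable metrics. The Python sketch below computes the demographic parity difference, which is the gap in positive-outcome rates between groups, for a hypothetical set of model decisions. The data, group names, and `MAX_ALLOWED_GAP` tolerance are assumptions, and demographic parity is only one of several fairness metrics an organization might choose.

```python
from collections import defaultdict


def positive_rate_by_group(decisions):
    """decisions: iterable of (group, approved) pairs; returns approval rate per group."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def demographic_parity_difference(decisions):
    """Largest gap in approval rates between any two groups (0 means equal rates)."""
    rates = positive_rate_by_group(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical model decisions, tagged with a protected attribute.
decisions = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

MAX_ALLOWED_GAP = 0.10  # assumption: the acceptable gap is a policy decision

gap = demographic_parity_difference(decisions)
print(f"Demographic parity difference: {gap:.2f}")  # 0.50 (0.75 vs 0.25)
print("Needs review" if gap > MAX_ALLOWED_GAP else "Within tolerance")  # Needs review
```

Running a check like this before each release, rather than once after deployment, is one way to build bias evaluation into the lifecycle.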

6. Lack of Skilled Talent and Cross-Functional Collaboration

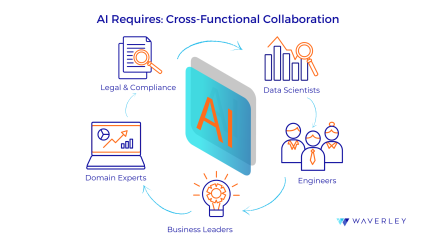

AI is not just a data science problem. Projects that rely solely on technical teams often miss domain context, producing models that are mathematically sound but operationally irrelevant.

Successful AI projects require close cooperation between data scientists, engineers, subject matter experts, legal teams, and business stakeholders. Communication gaps between technical and non-technical staff can lead to poor adoption, ambiguous performance metrics, and misaligned expectations.

Businesses that invest in AI training and upskilling across departments, not just technical teams, are better positioned to integrate AI into everyday workflows. This broader organizational focus is essential for long-term success, because effective AI adoption depends on readiness, ownership, and alignment across people and processes, key principles explored in Waverley’s perspective on the key to AI enablement.

7. Poor Model Deployment and MLOps Practices

Many AI projects stall after their initial deployment because of inadequate operational planning. When model drift and shifting data distributions go unmonitored, accuracy steadily declines over time.

Without appropriate MLOps practices, such as version control, monitoring, automated retraining, and rollback mechanisms, AI systems become brittle and difficult to maintain. A common and costly error is to treat AI models as static artifacts rather than living systems.
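Monitoring for data drift is one of the MLOps practices mentioned above. The sketch below computes the Population Stability Index (PSI), a common drift statistic, between a feature's training-time distribution and its live distribution, and flags retraining when the value exceeds a threshold. The equal-width binning, the `needs_retraining` helper, and the 0.25 threshold (a widely used rule of thumb) are assumptions to adapt per feature.

```python
import math


def population_stability_index(reference, live, bins=10, eps=1e-6):
    """Compare a live feature sample with its training-time reference sample.

    Bins are equal-width over the reference range; live values outside that
    range are clamped into the edge bins. eps avoids log(0) and division by
    zero when a bin is empty.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def bin_proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    ref_p, live_p = bin_proportions(reference), bin_proportions(live)
    return sum(
        (live_share - ref_share) * math.log(live_share / ref_share)
        for ref_share, live_share in zip(ref_p, live_p)
    )


DRIFT_THRESHOLD = 0.25  # assumption: a common rule of thumb; tune per feature


def needs_retraining(reference, live):
    return population_stability_index(reference, live) > DRIFT_THRESHOLD


reference = [i / 100 for i in range(100)]       # training-time values, 0.00 to 0.99
stable = list(reference)                        # same distribution in production
shifted = [0.5 + i / 200 for i in range(100)]   # production values moved upward

print(needs_retraining(reference, stable))   # False
print(needs_retraining(reference, shifted))  # True
```

In practice, a check like this would run on a schedule for each important input feature, with alerting, versioned retraining, and rollback driven by its result.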

8. Weak Change Management and User Adoption

Even well-designed AI systems will fail if users don’t trust or embrace them. A lack of transparency around AI-generated outputs, resistance to change, and fear of job loss can severely hamper adoption.

Businesses often underestimate how important it is to prepare users for AI-driven workflows. Building trust and long-term adoption requires clear communication, training, and early involvement of end users in the design process.

9. Scaling Too Fast or Too Slow

Some businesses rush to scale AI solutions before validating their value, while others remain stuck in pilot mode, unable to move from testing to production.

Both extremes are problematic. Successful AI adoption requires a well-defined plan that balances experimentation with long-term objectives, allowing businesses to scale gradually while staying aligned with their goals.

Conclusion: How to Avoid These AI Implementation Pitfalls

Preventing AI implementation errors takes more than technical proficiency. It requires strategic clarity, solid data foundations, cross-functional cooperation, ethical responsibility, and disciplined operational practices.

Successful organizations treat AI as a long-term competency rather than a one-off initiative. By aligning AI initiatives with business objectives, investing in people and processes, and implementing appropriate governance frameworks, businesses can build AI systems that are scalable, reliable, and impactful.

At Waverley, we help companies move beyond experimentation and avoid common AI implementation mistakes by designing AI solutions grounded in real business objectives. From data strategy and model development to secure deployment, MLOps, and responsible AI governance, our teams partner closely with clients to build scalable, production-ready AI systems that deliver measurable value.

Whether you are starting your AI journey or looking to mature existing initiatives, Waverley brings the technical expertise, strategic alignment, and cross-functional approach needed to turn AI into a sustainable competitive advantage. Let’s connect now.
