AI in Robotics: Benefits and Tips of AI-Driven Robotics Development


Technology helps people work faster, better, and more efficiently. However, having a machine that can perform certain automated tasks is no longer enough. We want it to see, perceive, and think the way we do. We now need robotic assistants that can detect and identify objects, analyze their context, make autonomous decisions, and act on those decisions physically. What was science fiction just half a century ago is our reality today, thanks to AI in Robotics.

Just as the introduction of assembly lines contributed to the Industrial Revolution, the combination of Robotics and AI is now disrupting business operations. Robots can process visual and audio data, understand natural language, and make decisions in real time. This lets them help businesses increase productivity, reduce costs, minimize human error, and boost workplace safety.

In this article, we discuss how AI works in Robotics, which industries benefit from it today, and what challenges and opportunities lie ahead for the technology. We also look at some examples of AI in Robotics and at how Waverley approaches such projects.

What is AI in Robotics: Overview

A common misconception is that anything AI-powered is a robot, or that all robots are smart (i.e. AI-powered). In fact, any computer system can use AI if it has enough computing power and runs the algorithms and models needed for “intelligent” or “human” tasks. Such tasks include processing visual data, understanding natural language, analytical thinking, decision-making, and more. On the other hand, robots do not necessarily need AI to operate. They can be pre-programmed for repetitive tasks using other kinds of algorithms and calculations, or be controlled by an operator.

Artificial Intelligence, then, is the branch of computer science that focuses on teaching machines to perform complex intellectual tasks. These include perception, natural language processing, reasoning, decision-making, and even emotional intelligence.

Robotics, in turn, is an interdisciplinary branch of engineering that draws mainly on mechanical engineering, electrical engineering, and computer science. It deals with the design and construction of machines that can move and interact with humans to help them complete physical tasks.

Applying AI to Robotics gives us a multitude of autonomous smart machines that can assist or replace humans in repetitive, physically demanding, or dangerous tasks. Perhaps the most familiar example is the AI-powered robotic vacuum cleaner. Unlike their less advanced counterparts, these vacuums are equipped with cameras that let them detect nearby objects and measure the distance to them in real time to avoid obstacles.

How is AI used in Robotics?

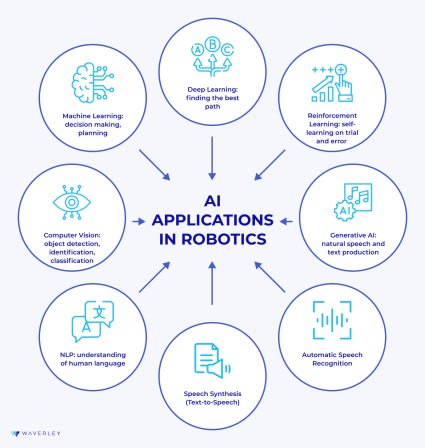

Now, let’s take a look at how exactly AI technologies can work for robotics, making them truly autonomous and smart.

First of all, to work with AI, robots need hardware with enough computing power to handle AI workloads. Computing can be done on board or in the cloud. The choice depends on the robot’s design and dimensions, and on whether it handles general-purpose or specific-purpose workloads. On-board computing allows real-time data processing with low latency and without an internet connection, within the limits of the hardware the robot can carry and power. Cloud computing, meanwhile, makes it possible to build lighter AI-powered robots, but these require stable network connectivity to function properly.
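One rough way to reason about this trade-off is a latency budget. Offloading to the cloud only helps if the network round trip plus remote inference fits within the time the robot has to react. Below is a toy decision helper written as a sketch. The `ComputeOption` type, the `choose_compute` function, and all millisecond figures are hypothetical placeholders, not measurements.

```python
from dataclasses import dataclass


@dataclass
class ComputeOption:
    name: str
    inference_ms: float    # time to run the model on this hardware
    network_rtt_ms: float  # round-trip network time (0 for on-board)
    needs_network: bool


def choose_compute(options, deadline_ms, network_available):
    """Return the fastest option that meets the deadline, or None.

    Hypothetical helper: real systems also weigh power, cost, and privacy.
    """
    feasible = []
    for opt in options:
        if opt.needs_network and not network_available:
            continue  # cloud options are unusable without connectivity
        total = opt.inference_ms + opt.network_rtt_ms
        if total <= deadline_ms:
            feasible.append((total, opt))
    if not feasible:
        return None
    return min(feasible, key=lambda pair: pair[0])[1]


# Illustrative numbers: a weak on-board computer vs. a fast cloud GPU.
onboard = ComputeOption("onboard", inference_ms=120.0, network_rtt_ms=0.0, needs_network=False)
cloud = ComputeOption("cloud", inference_ms=8.0, network_rtt_ms=60.0, needs_network=True)

print(choose_compute([onboard, cloud], deadline_ms=100.0, network_available=True).name)   # cloud
print(choose_compute([onboard, cloud], deadline_ms=200.0, network_available=False).name)  # onboard
```

If no option meets the deadline, the helper returns `None`. That is the case where a lighter model or faster on-board hardware is needed.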

Next, various AI technologies and algorithms help robots process data in ways that approximate human-like perception, reasoning, and behavior:

- Deep Learning. A subset of Machine Learning that trains deep neural networks, often with millions or billions of parameters, on very large amounts of data. Classical Machine Learning mostly works with flattened input vectors and models with a fairly limited number of parameters. Deep Learning, by contrast, makes the most of complex tensor inputs, covering structured data like images, speech, and text, and learns very large internal representation spaces. This gives it unprecedented capabilities to extract hidden patterns from enormous datasets.

- Reinforcement Learning. A subset of ML that is particularly applicable to robotics because it resembles trial-and-error learning. The system receives positive or negative feedback after its actions and improves through self-learning. A hands-on example of RL in robotics is teaching legged robots to walk, which can take as little as 10 hours of training.

- Computer Vision. Relying on data science and data labeling, machines are trained to perceive the images and video they capture, that is, to identify, detect, and classify objects in this visual content. Powered by CV models, robots can detect movements, recognize faces and emotions, inspect their surroundings, and so on.

- NLP, ASR, TTS. Natural Language Processing gives robotic systems the ability to interpret text written in human language. Combined with Automatic Speech Recognition, it lets computer systems recognize human speech. This way, robots can not only understand direct commands but also extract meaning from varied human expressions and perform sentiment analysis. They can then produce a relevant text response, or a spoken one with the help of Text-to-Speech (speech synthesis).

- Generative AI. One of the most recent developments, Generative AI brings robots closer to humans by enabling them to produce original text. In combination with Text-to-Speech, it also lets them speak like a real person. Today, integrating generative models such as ChatGPT is booming in personal digital and robotic assistants, social robots, and consumer robotics.
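The trial-and-error idea behind Reinforcement Learning can be shown with a minimal tabular Q-learning sketch on a hypothetical one-dimensional corridor. The agent starts at cell 0 and earns a reward of +1 for reaching the last cell. Every other step costs a small penalty. This is a textbook toy and far simpler than training a legged robot. The environment, rewards, and hyperparameters are illustrative assumptions.

```python
import random

N_STATES = 6        # corridor cells 0..5; cell 5 is the goal
ACTIONS = (-1, +1)  # move left or right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2


def step(state, action):
    """Toy environment: returns (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True   # positive feedback at the goal
    return next_state, -0.01, False    # small negative feedback per step


def train(episodes=500, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: mostly exploit the best known action, sometimes explore.
            if rng.random() < EPSILON:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            next_state, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            # Q-learning update toward reward + discounted best future value.
            q[(state, action)] += ALPHA * (reward + GAMMA * best_next - q[(state, action)])
            state = next_state
    return q


q_table = train()
policy = [max(ACTIONS, key=lambda a: q_table[(s, a)]) for s in range(N_STATES - 1)]
print(policy)  # learned action for each non-goal cell
```

After training, the greedy policy should move right (+1) from every cell. No one told the agent this rule. It emerged purely from the reward feedback.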

Summing up, AI lets machines be autonomous and adaptive using their sensors, such as cameras, accelerometers, and vibration and proximity sensors. They can understand their context in real time, act accordingly, and learn from new data to improve their behavior.

Understanding the Benefits of AI in Robotics

Businesses, organizations, governments, and individual users keep discovering new opportunities in applying Artificial Intelligence to Robotics. According to some forecasts, by the end of this decade more than a quarter of global GDP will be generated by AI. Meanwhile, the AI-driven robotics market is projected to grow at a compound annual growth rate (CAGR) of 11.63% between 2024 and 2030, reaching US$36.78bn by 2030.
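A quick sanity check on figures like these is to run compound growth backwards. A value V reached after n years at rate r implies a starting value of V / (1 + r)^n. The sketch below applies that to the projection quoted above, assuming six compounding years from 2024 to 2030. The implied 2024 figure is our own arithmetic, not part of the cited forecast.

```python
def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Invert compound growth: end = start * (1 + cagr) ** years."""
    return end_value / (1 + cagr) ** years


def project(start_value: float, cagr: float, years: int) -> float:
    """Forward compound growth from a starting value."""
    return start_value * (1 + cagr) ** years


start_2024 = implied_start_value(36.78, 0.1163, 6)  # US$ bn
print(f"Implied 2024 market size: US${start_2024:.1f}bn")  # US$19.0bn
```

In other words, an 11.63% CAGR roughly doubles the market over the six-year window.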

In this section, we take a closer look at the benefits of AI for Robotics and global business growth.

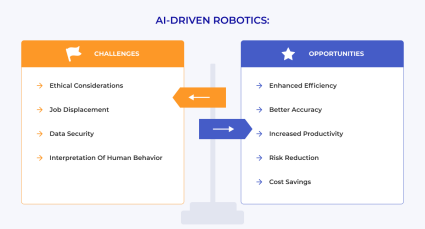

1. Enhanced Efficiency

Powered by AI, robots can work autonomously or semi-autonomously, so they no longer need an operator controlling them all the time. Business owners can therefore delegate time-consuming, repetitive, and mundane tasks to robots. This frees up staff for higher-level work that requires skills robots cannot provide. Autonomous robots can also work around the clock, pausing only for charging and maintenance rather than shifts, lunch breaks, sick leave, or vacations. This ensures uninterrupted service and product delivery.

2. Improved Accuracy

The AI technologies mentioned above, especially deep learning, reinforcement learning, and sensor fusion, contribute greatly to the accuracy of intelligent machines. Self-learning algorithms let robots learn from experience, and real-time processing of sensor data lets them react precisely. As a result, dexterous robots can control their limbs and adapt their grip force well enough to cut and cook food and assemble small and fragile mechanisms. They can even assist surgeons in delicate procedures, including open-heart surgery, with very high precision.

3. Increased Productivity

This advantage follows logically from the previous ones: more efficient and accurate work brings faster results with fewer defects and losses in the long run. In addition, machines’ efficiency is far less affected by physically challenging conditions, such as extreme temperatures, heights, heavy loads, or lack of light and fresh air.

4. Risk Reduction and Safety

Hybrid automation and AI in robotics are game-changing for domains and environments that pose increased risks to human health and life. Critical activities can become much safer, such as handling hazardous chemicals and organisms in labs, rescue operations in dangerous locations, and military and anti-terrorist missions. Intelligent robotic systems also increase worker safety in potentially harmful environments, such as plants and factories. In medicine, they support patient wellbeing by making high-precision procedures less invasive.

5. Cost Savings

An AI-powered robotic system may look like a hefty investment, but most of its cost is paid upfront. It won’t ask for salary increases, bonuses, perks, or social benefits. A fleet of robots in a warehouse or at a manufacturing facility can do the job of dozens of workers with greater efficiency and precision. Meanwhile, it needs only a handful of specialists for support and maintenance.
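Whether the upfront cost pays off comes down to a simple payback calculation. Divide the initial investment by the net annual saving, which is the labor cost replaced minus the robot’s running and maintenance costs. All figures below are hypothetical placeholders for illustration, not benchmarks.

```python
def payback_years(upfront_cost, annual_labor_saved, annual_running_cost):
    """Years until cumulative net savings cover the upfront investment.

    Returns None if the robot never pays for itself (net saving <= 0).
    Ignores discounting, financing, and downtime for simplicity.
    """
    net_annual_saving = annual_labor_saved - annual_running_cost
    if net_annual_saving <= 0:
        return None
    return upfront_cost / net_annual_saving


# Hypothetical example: a 500k fleet replacing 250k/year of labor,
# with 50k/year for maintenance, energy, and support staff.
print(payback_years(500_000, 250_000, 50_000))  # 2.5
```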

Future Trends and Challenges of AI and Robotics Integration

Of course, as in any field, AI in Robotics faces certain challenges that NGOs and researchers, such as the Trustworthy Autonomous Systems Hub, are working to resolve. The challenges of implementing AI in robotics software development include the following:

- Ethical Considerations: at this point, it is hard to predict whether decisions made by an AI system will be ethical from a humane and cultural perspective. Because ethics and moral views are rather subjective and culture-bound, they are hard to teach a machine objectively. So far, this is mitigated by training AI on filtered data and limiting robots’ independence.

- Job Displacement: as robots take over more tasks currently done by people, some professions may become unnecessary and some workers may lose their jobs. Another way to look at it is that technological advancements make certain skills redundant (many past professions no longer exist today) while creating demand for new qualifications and occupations.

- Privacy and Security: AI-powered robots are computer systems that collect, process, and store our data, including sensitive information. They are also often connected to the internet, which makes them prone to security breaches. Malicious actors may even gain control over robotic systems and make them harmful to people. However, this challenge applies to other computer systems as well. The engineering community has developed plenty of security best practices, policies, and strategies to mitigate it.

- Interaction with humans: this challenge arises at the intersection of computer science and psychology, highlighting the fact that human behavior can be unpredictable. Thus, intelligent robots should be able to deal with it without breaking down or harming people. Finding a way to teach emotional intelligence to machines could be a solution to this issue and also enable robots to establish meaningful connections with people.

Read our updated article on Top 25 Coding Errors Leading to Software Vulnerabilities to make sure you don’t put your cybersecurity at risk.

AI Applications in Robotics Across Domains

With these benefits of AI-powered robotic systems in mind, we can now consider some actual use cases for these smart machines. We also look at how they help businesses from different industries achieve better results.

| Domain | Applications |
|---|---|
| Manufacturing | Quality Control, Collaborative Robots, Autonomous Robots, Assembly Robots |
| Aerospace | Autonomous Rover, Robotic Companions |
| Disaster response | Advanced Drones |
| Transportation and delivery | Advanced Drones |
| Agriculture | Advanced Drones |
| Healthcare | Robotic Assistants, Robotic Surgery, Service Robots |
| Customer Service | Social Robots, Service Robots |
| MilTech | Unmanned Aerial Vehicles, Unmanned Ground Vehicles |
| Smart Home | Service Robots, Personal Robotic Assistants, Social Robots |

AI and Robotics: Real-World Examples

Having covered AI robotics applications across domains, let’s review some of the most prominent real-world implementations of AI in robotics.

Autonomous Mobile Robots (AMRs)

These are usually wheeled robots that move around an environment independently. They use 2D and 3D cameras to localize themselves, process this data in real time, and take actions based on their decision-making capabilities. They are most often used in warehouses and manufacturing facilities to help workers move heavy loads over long distances. Examples include collaborative robots, inventory transportation robots, and storage picking robots. Interestingly, autonomous cars also belong to this category.
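Path planning is one of the decisions an AMR makes on its own. Here is a minimal sketch, assuming the robot has already built an occupancy grid of the floor (0 = free, 1 = obstacle). A* search finds a shortest route that moves only up, down, left, or right. Production navigation stacks add costmaps, kinematic constraints, and continuous replanning on top of ideas like this. The grid and function name here are illustrative.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on an occupancy grid (0 = free, 1 = blocked).

    Returns a list of (row, col) cells from start to goal, or None.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for 4-connected, unit-cost moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:  # walk parent links back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = new_g
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (new_g + h((nr, nc)), new_g, (nr, nc)))
    return None


warehouse = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],  # a shelf row with a single gap
    [0, 0, 0, 0],
]
print(astar(warehouse, (0, 0), (2, 0)))
```

Here the only way past the shelf row is through its gap, so the planner routes the robot around it rather than straight down.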

Bottobo

This AMR solution is also a collaborative robot for supply chain and logistics. In addition to working and navigating autonomously around facilities, it adapts to human behavior. Bottobo’s proprietary Warehouse Intelligence System also integrates with clients’ other business applications to provide business intelligence features.

[source: https://www.bottobo.com/]

Ideasparq

The company offers a range of AMR models with different designs and functionality for a variety of commercial and industrial applications. One example of AI in robotics is its Autonomous Floor Scrubber for cleaning large surface areas, which adapts to its environment. Its LiDAR sensors and cameras enable autonomous navigation, path planning, and obstacle avoidance. It can also analyze and optimize its movement patterns and performance using data analytics and ML.

[source: https://www.ideasparq.com/amr]

Robotic Arms

This type of robot is designed to pick and move items autonomously, or to assemble and craft mechanisms. AI algorithms help robotic arms control their movements and the pressure they apply to objects more accurately. Cartesian and SCARA robotic arms are usually used in industrial settings. Articulated (or jointed) robots have broader applications, including healthcare, prosthetics, and even the household.
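Controlling an articulated arm starts with knowing where its end effector is for a given set of joint angles. The sketch below shows the standard forward-kinematics calculation for a hypothetical two-link planar arm with link lengths `l1` and `l2`. The function name and default link lengths are illustrative assumptions.

```python
import math


def forward_kinematics(theta1, theta2, l1=1.0, l2=1.0):
    """End-effector (x, y) of a planar 2-link arm.

    theta1: shoulder angle measured from the x-axis (radians)
    theta2: elbow angle relative to the first link (radians)
    """
    elbow_x = l1 * math.cos(theta1)
    elbow_y = l1 * math.sin(theta1)
    # The second link's absolute angle is the sum of both joint angles.
    x = elbow_x + l2 * math.cos(theta1 + theta2)
    y = elbow_y + l2 * math.sin(theta1 + theta2)
    return x, y


# Shoulder at 90 degrees, elbow bent -90 degrees: the arm points up, then right.
x, y = forward_kinematics(math.pi / 2, -math.pi / 2)
print(round(x, 6), round(y, 6))  # 1.0 1.0
```

Inverse kinematics, which finds the joint angles that reach a target point, is the harder reverse problem that arm controllers solve on top of this model.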

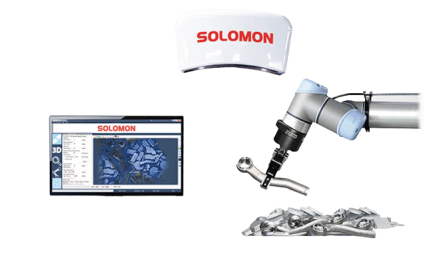

Solomon

A specialist in 3D vision systems since 1990, this company now provides a range of robotic arm models for industrial automation. Powered by proprietary AI vision software, Solomon’s vision-guided robots can efficiently handle object picking and placing, material handling and positioning, and defect inspection and repair.

[Source: https://www.solomon-3d.com/accupick-3d/]

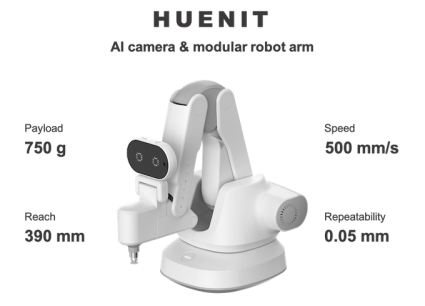

Huenit

This is a Kickstarter project for an AI-powered high-precision articulated robot for a household or a small business. It is equipped with a camera and microphones with computer vision and voice recognition capabilities. Its robotic arm is modular, so it can serve as a 3D printer, laser cutter and engraver, vacuum gripper, pen holder for writing and drawing, and more, depending on the model in use.

[source: https://www.kickstarter.com/projects/huenit/huenit-ai-camera-and-modular-robot-arm]

Cobots

Cobots come in different forms: fixed robotic arms, humanoids, and legged and wheeled robots. Their main purpose is to work safely and effectively alongside a human, hence “cobot”, short for collaborative robot. Cobots rely on AI technologies to identify, process, and respond to human speech and gestures, and even learn from them. They are designed to make work easier for human operators in tasks that require heavy lifting or high precision.

Techman AI Cobot

This is a series of robotic arms for the manufacturing industry, enhanced with AI vision technology to better perceive the environment they operate in. The company has developed the TM AI+ Training Server, TM AI+ AOI Edge, and TM Image Manager software. These tools help clients train AI models for their cobots, increase their accuracy, and manage factory deployment.

[source: https://www.tm-robot.com/en/tm7s/]

Legged Robots

The complexity of legged robots lies in their legs: the most challenging task for robotics engineers and researchers is to make the limbs articulated well enough to provide stability and speed. As a result, legged robots handle rough terrain with more versatility than their wheeled counterparts. However, they tend to be less efficient in terms of performance and power consumption.

Spot

One of Boston Dynamics’ most famous developments, this robot dog can walk and navigate many types of environments, especially outdoors. It can climb stairs, open doors, and, when equipped with an additional robotic arm, pick up and put down objects. It is already used to provide visibility in hazardous environments, for remote inspection, and for construction site monitoring.

[source: https://bostondynamics.com/products/spot/]

Atlas

Atlas is another Boston Dynamics creation, one that blew our minds the first time we saw it dancing. Thanks to advanced hardware and an AI-powered control system, it can run, jump, skip, and even flip quite smoothly. Atlas is widely known as the world’s most dynamic humanoid robot, pushing past the usual constraints of this type of robot. So far, it has been used purely for research, helping robotics engineers study and push the limits of robotic mobility.

[Source: https://bostondynamics.com/atlas/]

Tips for Successful AI-Driven Robotics Development

As a company with strong expertise and experience in Robotics development services and AI development services, Waverley Software would like to share some practical insights from the domain of AI robot development.

Choosing the Right Hardware

AI-driven robotics development calls for two types of hardware:

- Computing hardware

- Robot hardware

As we mentioned earlier, the computing hardware you choose for AI workloads can be general-purpose or specific-purpose. The former requires more computing power and tends to be more expensive, while the latter can be lighter and cheaper. For example, Luxonis, the makers of an embedded ML and CV platform, create advanced cameras with on-chip processing. This lets robotics enthusiasts and startups save on general-purpose computing hardware. Because these cameras can run various computer vision models themselves, robot creators don’t have to allocate additional computing resources on the robot for this.

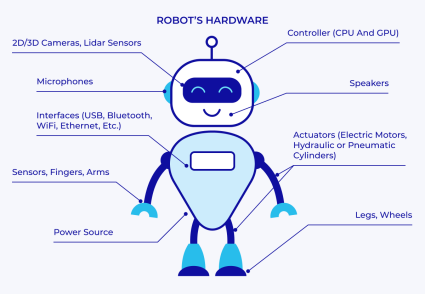

Meanwhile, the hardware that makes up the robot’s body and enables its movement comes in a huge variety of models and elements. Depending on the robot’s intended application, you can choose parts such as robot bases, joints, flanges, motors, propellers, and drives. From these you can build humanoid robots, aerial robots, standalone arms or legs, platform-like robots, wheeled robots, or hybrid robots of different sizes.

Beyond the “body” parts and the “brain”, robots also need several other hardware elements. These include sensors for data collection, actuators for moving parts, communication interfaces for exchanging information with other devices and software, and power sources. And, of course, they need controllers: micro- or mini-computers programmed to control the robot’s movements and actions. To see and hear, a robot will also need a camera and a microphone.

Programming the Robot

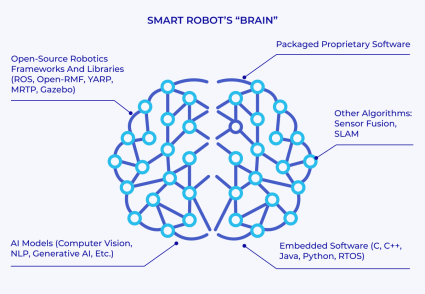

To make a robot move and respond to even basic commands, it has to be programmed. Robotic hardware, such as sensors, actuators, and interfaces, typically comes with pre-installed drivers. But to make all the elements work together and “know” about each other, we need to program the controller. In many cases, manufacturers package robot controller software with the hardware and tailor it to the specific purpose of the robot model.

However, engineers can also program the robot themselves using programming languages such as C++ and Python. They can also use robotics software development kits and tools, such as ROS, Nav2, SLAM Toolbox, and MoveIt.

Sensor fusion algorithms combine data from a robot’s numerous sensors to increase its accuracy and reliability. Simultaneous Localization and Mapping (SLAM) relies on sensor data to help the robotic system build a map of its environment and locate itself within it. Autonomous navigation algorithms use the data collected from the sensors to help robots plan paths and navigate their surroundings.
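Sensor fusion can be as simple as weighting each sensor by how much you trust it. Here is a minimal sketch, assuming two independent distance sensors with known noise variances, for example a LiDAR and an ultrasonic sensor. The readings and variances are hypothetical. The technique is inverse-variance weighting, which is mathematically the same as the measurement-update step of a one-dimensional Kalman filter.

```python
def fuse(readings):
    """Inverse-variance weighted fusion of independent measurements.

    readings: list of (value, variance) pairs.
    Returns (fused_value, fused_variance).
    """
    weights = [1.0 / var for _, var in readings]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, readings)) / total
    fused_variance = 1.0 / total  # always smaller than the best single sensor's
    return fused_value, fused_variance


lidar = (2.00, 0.01)       # metres, variance in m^2 (hypothetical)
ultrasonic = (2.20, 0.04)  # the noisier sensor gets less weight
value, variance = fuse([lidar, ultrasonic])
print(f"{value:.3f} m, variance {variance:.4f}")  # 2.040 m, variance 0.0080
```

The fused estimate lies closer to the more trustworthy LiDAR reading. It is also more certain than either sensor alone, which is the whole point of fusion.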

Robot programming also overlaps with embedded systems and IoT development. Embedded development is integral to robotics because it handles narrow, specific robot functions that need tight control, such as movement accuracy or sensor control. This work often runs on a real-time operating system (RTOS).

IoT development, which is also rooted in embedded systems, converges with robotic system development in communication and data exchange with other connected devices. This is reflected in robot fleet management and synchronization. Also, as with IoT devices, robots use the data collected from their sensors for performance and environment analytics, which helps improve their autonomy. Together, advanced control systems and device communication push forward AI- and IoT-based intelligent automation in Robotics, making machines safer and more accurate.
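A common building block for fleet management is a heartbeat. Each robot periodically reports its status, and the fleet manager flags robots that have gone silent. The sketch below is a hypothetical, transport-agnostic version. In practice the messages might travel over MQTT, ROS 2, or HTTP. The `FleetMonitor` class, timeout, and status fields are illustrative assumptions.

```python
import time
from dataclasses import dataclass, field


@dataclass
class FleetMonitor:
    timeout_s: float = 5.0
    last_seen: dict = field(default_factory=dict)    # robot_id -> timestamp
    last_status: dict = field(default_factory=dict)  # robot_id -> status payload

    def heartbeat(self, robot_id, status, now=None):
        """Record a status report from a robot."""
        now = time.monotonic() if now is None else now
        self.last_seen[robot_id] = now
        self.last_status[robot_id] = status

    def offline(self, now=None):
        """Robots whose last heartbeat is older than the timeout."""
        now = time.monotonic() if now is None else now
        return sorted(rid for rid, t in self.last_seen.items() if now - t > self.timeout_s)


monitor = FleetMonitor(timeout_s=5.0)
monitor.heartbeat("amr-1", {"battery": 0.82, "task": "picking"}, now=100.0)
monitor.heartbeat("amr-2", {"battery": 0.40, "task": "charging"}, now=103.0)
print(monitor.offline(now=106.0))  # ['amr-1']
```

The optional `now` parameter makes the logic easy to test deterministically. In production it defaults to a monotonic clock, so wall-clock adjustments cannot trigger false alarms.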

Robot programming can be done through a web or desktop interface as well as from an IDE via a remote SSH session. Developers can set up visual dashboards reflecting the robot’s hardware performance and analytics, streaming content from the robot’s sensors in real time, containing a UI for robot controls, and more. This can be done with any front-end development technology or using ready-made platforms, such as Formant.

Robots can also be programmed by demonstration. An operator literally teaches them the moves, either by guiding the robot by hand or by using a handheld device called a teach pendant. This reverses ‘traditional’ programming: instead of writing instructions that produce the robot’s movements, the system records instructions that follow the robot’s actions and movements.
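In its simplest form, programming by demonstration means recording the robot’s joint positions while an operator guides it, then replaying them. Here is a minimal sketch, assuming hypothetical joint-angle waypoints captured at a few key moments. It linearly interpolates between recorded waypoints to produce a smoother replay trajectory. Real teach-pendant and demonstration-learning systems add timing, speed limits, and safety checks.

```python
def interpolate(waypoints, steps_between):
    """Linearly interpolate between recorded joint-angle waypoints.

    waypoints: list of tuples, one angle (radians) per joint.
    steps_between: number of segments each waypoint-to-waypoint move is split into.
    Returns the full list of joint-angle tuples to send to the controller.
    """
    trajectory = []
    for start, end in zip(waypoints, waypoints[1:]):
        for i in range(steps_between):
            t = i / steps_between
            trajectory.append(tuple(a + t * (b - a) for a, b in zip(start, end)))
    trajectory.append(tuple(waypoints[-1]))  # finish exactly on the last waypoint
    return trajectory


# Hypothetical demonstration: two joints, three recorded poses.
recorded = [(0.0, 0.0), (1.0, 0.5), (1.0, 1.0)]
replay = interpolate(recorded, steps_between=2)
print(replay)
```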

Another approach is offline programming, which is done on a computer using CAD systems and simulators, without connecting to the robot. Once the program has been designed and tested in the virtual environment, it is deployed to the robot.

Exploring AI Algorithms

As with hardware, your robot’s software depends on its area of application and on what you intend AI to do for it. Some hardware, like cameras and microphones, comes with embedded software. Manufacturers may also provide out-of-the-box development kits built specifically for AI applications in robotics. These come packed with ready-made ML models, libraries, documentation, and a knowledge base; examples include DepthAI and Intel’s RealSense platform.

If you choose to move your computing to the cloud, you can pick from the range of AI services and models for general ML tasks that cloud vendors offer. For example, if you want your robot to hold a conversation, you may try existing services that provide a chatbot experience, such as GCP’s Dialogflow or Amazon Lex. Alternatively, you can deploy your own conversation engine based on Meta’s Llama 2 in any cloud. You can also create your own ML models and algorithms with Amazon’s no-code solution SageMaker Canvas, Microsoft’s enterprise-grade platform Azure Machine Learning, or GCP’s Vertex AI.

Data Collection and Preparation

This is the data science part of the AI robotics development process. When collecting and preparing data for your robot, take the following key aspects into account:

- The purpose, application area, and functionality of your robot will define the data scope it needs to train on. For example, if it is meant to harvest crops, you will need to train its CV model using the dataset of different crop images to enable the robot to distinguish the fruit from the leaves, the ripe fruit from the unripe one, and so on.

- Data annotation and labeling will be needed for “raw” datasets. There are online resources that offer annotated CV datasets, such as ImageNet or CIFAR-10, but if your application field is too niche or existing datasets are not comprehensive enough, you will most probably have to label your training data yourself using such tools as Doccano, Prodigy, and Label Studio, to name a few.

- Data augmentation. If your robot’s environment does not provide enough data that can be collected naturally, consider data augmentation. This means artificially expanding your existing dataset with modified copies of its samples so the system better handles unseen situations.

- Dataset balancing is critical. If certain data categories are underrepresented, you risk making your model biased and error-prone.

- Consider ethical issues. The data you collect and the way you label it directly affect how your robot interacts with humans. The robot may turn out to be offensive or discriminatory, or reveal sensitive information.
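Two of the steps above are easy to sketch. Assume a hypothetical crop-harvesting dataset where each sample carries a class label. Inverse-frequency class weights let underrepresented classes count more during training. A horizontal flip is one of the simplest image augmentations. Here images are plain nested lists of pixel values; real pipelines would use an imaging library. The labels and counts are illustrative.

```python
from collections import Counter


def class_weights(labels):
    """Inverse-frequency weights: rarer classes get larger weights.

    Scaled so that a perfectly balanced dataset gives every class weight 1.0.
    """
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


def hflip(image):
    """Mirror an image (list of pixel rows) left to right."""
    return [list(reversed(row)) for row in image]


labels = ["ripe"] * 80 + ["unripe"] * 15 + ["leaf"] * 5
print(class_weights(labels))
print(hflip([[1, 2, 3], [4, 5, 6]]))  # [[3, 2, 1], [6, 5, 4]]
```

Weighting the loss and augmenting the rare classes are complementary. Weighting changes how much each existing sample counts, while augmentation adds plausible new samples.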

On Waverley’s blog, you can find an in-depth guide on Data Collection for Machine Learning for actionable insights and strategies.

Testing and Iteration

Similar to regular software testing, robotics systems go through such classic test levels as component, integration, system, and acceptance testing. These help developers make sure robots perform the intended functions and follow the typical use case scenario, meet the projected performance requirements, consume resources adequately and are user-friendly enough.

However, unlike pure software QA, AI robotics testing spans several layers: software, hardware, AI functionality, and autonomous operation. It should include, for example:

- Simulation and laboratory testing are must-do when developing a robot, especially when access to physical robots is limited or the robot is designed to work in a specific environment.

- Sensor calibration and disturbance testing to make sure all sensors function normally and check how well the robot interacts with its environment.

- Tests for navigation and path planning will verify the robot’s ability to avoid obstacles, create accurate maps, and localize itself.

- Autonomy testing ensures the robot’s decision-making algorithms are robust enough for it to adapt and work independently.

- Human-robot interaction tests evaluate the adequacy and safety of a robot’s perception and response to human speech and behavior.
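Sensor calibration often starts with estimating a bias while the robot is known to be stationary. Here is a minimal sketch, assuming a hypothetical single-axis gyroscope whose true rate at rest is zero. It averages a batch of stationary samples to estimate the offset and subtracts that offset from later readings. It also rejects the sensor if the readings at rest are noisier than a tolerance. The sample values and tolerance are illustrative.

```python
import statistics


def calibrate_bias(stationary_samples, max_noise_std):
    """Estimate a zero-rate bias; raise if the sensor looks too noisy.

    stationary_samples: readings taken while the robot is not moving.
    max_noise_std: largest acceptable standard deviation of those readings.
    """
    bias = statistics.fmean(stationary_samples)
    noise = statistics.stdev(stationary_samples)
    if noise > max_noise_std:
        raise ValueError(f"sensor noise {noise:.4f} exceeds tolerance {max_noise_std}")
    return bias


def corrected(reading, bias):
    """Remove the estimated bias from a raw reading."""
    return reading - bias


samples = [0.021, 0.019, 0.020, 0.022, 0.018]  # rad/s at rest (hypothetical)
bias = calibrate_bias(samples, max_noise_std=0.01)
print(round(bias, 4), round(corrected(0.520, bias), 4))  # 0.02 0.5
```

Raising an error instead of silently returning a bias turns calibration into a pass/fail test that can run in a hardware-in-the-loop suite.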

In addition, following development best practices suited to your programming languages, processes, and project constraints remains the optimal strategy for software development and testing. It applies equally to AI robotics.

Deployment and Monitoring

Like testing, application deployment and monitoring are iterative processes, and continuous feedback from real-world usage is essential for refining and optimizing AI robotic systems over time. Regular updates, maintenance, and improvements based on monitoring insights contribute to the long-term success and reliability of the deployed systems.

Consider the following aspects of your system when deploying and monitoring the solution:

- Ensure smooth integration of AI models with the Robotic System, paying attention to communication protocols, compatibility with existing hardware, and real-time constraints. Monitoring the system’s adaptability and ability to learn from ongoing experiences, including retraining models based on new data is important here.

- Validate the real-world performance of the AI models, confirming that they generalize well beyond the training data and simulations. Use performance monitoring metrics related to accuracy, response time, and overall system efficiency. Also monitor environmental conditions that may affect performance, such as changes in lighting, temperature, or humidity. Predictive maintenance means monitoring the condition of hardware components and proactively scheduling maintenance or replacements, which helps minimize downtime.

- Implement safety protocols and mechanisms to handle unexpected situations or errors. This includes emergency stop procedures, fail-safes, and monitoring for abnormal behavior. Anomaly detection lets you identify unusual behavior or deviations from expected patterns and address issues promptly. Failure analysis mechanisms, such as logging and recording information about unexpected behaviors, are useful in post-incident analysis.

- Implement measures to protect sensitive data collected or processed by the robotic system. Ensure compliance with privacy regulations and adopt encryption and secure communication protocols. Security monitoring is a good method to detect and respond to potential cybersecurity threats. This is crucial for protecting against unauthorized access or data breaches.

- Ensure your robotic system is compliant with relevant regulations and standards, especially in safety-critical applications. This may involve certification processes and adherence to industry-specific guidelines as well as ongoing monitoring for compliance with relevant standards, regulations, and ethical guidelines.

- Provide training and comprehensive documentation for end-users and operators so they understand how to interact with and supervise the AI robotic system. This facilitates effective deployment and use. Monitoring user feedback and interactions will help you understand their experience with the robotic system and identify areas for improvement.

- Implement continuous improvement mechanisms that allow AI models to be updated based on new data and field experience. This includes over-the-air updates for deployed robotic systems and regular system assessments that let you address changing requirements in time. Monitoring resource utilization, including CPU usage, memory consumption, and power consumption, helps identify and address inefficiencies or resource bottlenecks early.
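Anomaly detection on telemetry can start very simply. Here is a minimal sketch, assuming a hypothetical stream of motor-current readings. It flags any value that deviates from the recent rolling mean by more than a set number of standard deviations. The class name, window, and threshold are illustrative. Production systems would tune them per signal and often use learned models instead.

```python
import statistics
from collections import deque


class RollingZScoreDetector:
    """Flag readings far from the rolling mean of recent normal readings."""

    def __init__(self, window=20, threshold=3.0, min_samples=5):
        self.history = deque(maxlen=window)
        self.threshold = threshold
        self.min_samples = min_samples  # don't judge until a baseline exists

    def update(self, value):
        """Return True if value is anomalous; only normal values join the history."""
        if len(self.history) >= self.min_samples:
            mean = statistics.fmean(self.history)
            std = statistics.pstdev(self.history)
            if std > 0 and abs(value - mean) / std > self.threshold:
                return True  # keep the spike out of the baseline
        self.history.append(value)
        return False


detector = RollingZScoreDetector(window=20, threshold=3.0)
currents = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 3.5, 1.02]  # amps (hypothetical)
flags = [detector.update(c) for c in currents]
print(flags)
```

Keeping flagged values out of the history stops a single spike from inflating the baseline and masking the next anomaly. Flagged events are natural candidates for the failure-analysis logs mentioned above.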

Security, privacy, and regulatory issues

As active participants in business-critical, invasive, or potentially harmful operations and processes, AI-powered robots must be governed by rigorous safety and security legislation, policies, and guidelines. So far, this area faces several problems:

- The need for effective privacy protection mechanisms in place.

- Lack of transparency in how data for model training is collected, processed, and used as well as obscurity in AI systems’ decision-making process that may impact human life.

- Lack of coordination and standardization in privacy laws and regulations across countries and regions.

OECD’s Artificial Intelligence Policy Observatory stresses that one of the hardest obstacles to overcome is the considerable gap between the pace of AI technology development and regulatory activity. Regulating technological advancements means finding a balance between risks and opportunities. That balance is highly contentious, especially in the context of international cooperation.

However, there has been some progress on data privacy and security at the regional and national levels, requiring software creators to inform users about how their data is used and to ask for their consent. Both the AI and Robotics industries must comply with existing data privacy regulations:

- The EU’s General Data Protection Regulation (GDPR) gives software users the right to control how their personal data is collected, processed, and used by different applications.

- The California Consumer Privacy Act (CCPA) gives consumers more control over the personal data that businesses collect and process.

- Several US states, such as Virginia, Colorado, and Connecticut, are actively enacting their own legislation to regulate data processing in AI, with a 20% pass rate for proposed bills (compared to only 2% at the federal level).

There are also technology- and sector-specific regulations:

- The EU Artificial Intelligence Act is set to become the world’s first comprehensive set of rules governing AI. It is not in force yet, but a provisional agreement on it has been reached, and it is expected to be implemented in 2025. The AI Act tiers its rules by the level of risk an AI system poses to society: the higher the risk, the stricter the rules. However, it has been heavily debated among EU member states, with critics arguing that it could make the EU market less attractive for AI-focused businesses.

- In the EU, robotics falls under the Machinery Regulation, which aims to ensure machine safety, increase users’ trust in the technology, reduce administrative burden and costs for manufacturers, set clear legal rules for manufacturing, and establish safeguards against non-compliant machinery products.

- In the US, the Health Insurance Portability and Accountability Act (HIPAA) sets the standards for protecting patient health information, which makes it relevant to AI Robotics applications in the Healthcare domain.

- California’s Senate Bill 327 (SB-327) on the security of connected devices aims to protect user data collected by IoT devices from unauthorized access, destruction, use, modification, or disclosure.

- The US does not have AI-specific regulation so far, except for the Biden Administration’s executive order driving the development of AI standards and ensuring the responsible and effective use of the technology by government agencies.

- In the US, robotics is regulated through a mix of federal and industry-specific standards and regulators: OSHA’s General Industry standards and ISO robotics standards describe safeguarding rules and other requirements for manufacturers, the National Highway Traffic Safety Administration (NHTSA) regulates automotive robotics applications, and the Federal Aviation Administration (FAA) regulates drone development.

Given all of the above, it is key to account for your target market’s safety and security requirements for AI and Robotics development, as they can be looser or stricter depending on the region, country, and even state.

Waverley’s Experience

Waverley’s engineering experts started working on robotics projects years before this domain gained its present popularity. For our clients, this means a deep understanding of the foundational technologies and their evolution, which translates into long-lasting, scalable products with a solid core. Today, we apply our expertise in Artificial Intelligence for robotics to deliver advanced solutions across a variety of industry domains.

Jibo

Consumer Robotics

Known to the general public as the first social robot for the home, Jibo combined impeccable mechanical engineering, smooth software development, and a remarkably humane concept. The Waverley team partnered with Jibo’s creators, contributing our expertise in robotic system architecture design, mobile development, and back-end development, as well as rigorous quality assurance, support, and maintenance services.

As a result, Jibo can recognize his users’ faces and voices, interpret their requests, answer them, even tell jokes, and “dance” in response to certain commands, thus creating deep emotional connections with humans. More importantly, what distinguishes the robot from competitors’ solutions is its stronger user data security and privacy: server and network providers cannot see any of the data the robot collects from its interactions with users.

Shadow Dexterous Hand

R&D

This is ShadowRobot’s extremely sensitive and accurate articulated robot that closely resembles a human hand. It is equipped with over 100 sensors and numerous actuators enabling independent motion for each finger, customizable tactile sensing, postural stability, shock mitigation, and natural bending. Powered by AI, and by deep learning and reinforcement learning in particular, it can be trained to master new moves and manipulate a virtually unlimited range of objects in real-life settings.

The company leveraged Waverley’s know-how in the AI and Robotics domains to enhance the robot’s AWS-based cloud infrastructure and DevOps processes. As the client’s trusted nearshore software developers, our engineers worked on strengthening the security of user data critical for research activities and on providing customer support. Our team also delivered an improved, fully managed software build service for the robot that reduces the client’s build and deployment costs.

Construction Robotic Fleet Controller

Construction

This is an ongoing project involving Boston Dynamics’ Spot robots and Clearpath Robotics’ Husky robots. Spot is a four-legged robot that can walk steadily over bumpy terrain, climb stairs and similar obstacles, and provide vision in hazardous environments. Husky is a ground vehicle platform with rugged construction and a high-torque drivetrain that can reach hard-to-access areas. Both robots are unmanned and autonomous, making them a perfect inspection automation solution for a setting such as a construction site.

The Waverley team of robotics and AI engineers is working on monitoring and 3D modeling functionality for the construction site, based on measurements and photos taken by the robots, as well as on additional useful features, such as connecting employees via video calls so they can observe the construction process remotely.

Robo Perception

R&D

Waverley’s AI and robotics geeks are developing a smart legged robot enhanced with computer vision technology. The lightweight four-legged robot is equipped with an ML-powered high-resolution camera with depth vision and object detection capabilities. The robot system’s simple, intuitive web interface provides remote controls, real-time video streaming with object detection, and a dashboard showing the robot’s performance and hardware status. It is designed for diverse automation applications, including industrial, commercial, and home use.

Conclusion

This was a general review of the current state of AI and Robotics fusion: its benefits for business, real-world applications, trends, and challenges. We can conclude that, despite challenges such as ethical considerations, lack of standardization, and privacy concerns, smart robotic solutions have a multitude of useful applications at present and great potential for the future. The industries that benefit most from AI in Robotics include logistics, manufacturing, welding, construction, transportation, and aerospace, while military technology (miltech), customer service, healthcare, and smart home are gaining traction in this sphere.

It is also worth noting that AI-powered robotics development is a high-responsibility, costly process that requires a multi-faceted approach, including mastery of AI technologies, mechanical engineering, embedded engineering, security and privacy requirements, and industry-specific knowledge.

Waverley Software, with its decades of experience in software development, has the capacity to meet these rigorous demands and help you integrate AI into your business with our robotics solutions. You can arrange a meeting with our consultants through our contact page.

FAQ

What is AI in robotics, and why is it important?

AI in robotics is the application of data science and machine learning algorithms that enable robots to perceive their environment and act in a human-like manner. This makes them autonomous and helpful for people. For example, computer vision helps robots detect and classify objects, which makes it possible to use them in hazardous environments. Similarly, robots trained with reinforcement learning can assist people with physically demanding tasks, such as handling heavy loads, working in extreme conditions, or supporting people with limited abilities.

What are the key benefits of integrating AI into robotics?

The benefits of integrating AI into robotics include higher business efficiency, productivity, and work accuracy, along with reduced risk and lower costs. As robots become autonomous, adaptable to their environment, and able to learn from past experience, they can replace human workers in 24/7 operations, hazardous environments, and hard labor, or help them complete high-precision and heavy-load tasks. Social and customer-facing robots are designed to interact with people smoothly, building emotional connections and providing entertainment and assistance with daily tasks. This way, businesses can increase quality, optimize the use of resources, provide better working conditions, and engage more customers.

How does AI enhance the efficiency of robotic systems?

AI technologies widely used in robotics include machine learning, deep learning, reinforcement learning, computer vision, generative AI, voice recognition, and speech synthesis. They make robotic systems autonomous and adaptable by enabling them to perceive their environment, process the data collected from it in real time, make decisions independently, and act accordingly without human supervision. Intelligent robots can also learn from past experience, with or without human intervention, improving their performance and actions over time. Thus, AI increases robots’ efficiency and performance compared to their less advanced counterparts.
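As a toy illustration of learning from experience, the sketch below applies tabular Q-learning, one of the simplest reinforcement learning algorithms, to a simulated robot in a hypothetical five-cell corridor. The corridor, reward values, and hyperparameters are assumptions chosen for readability; real robots work with far richer sensor data, usually in simulators and with deep reinforcement learning.

```python
import random

ACTIONS = (-1, +1)  # index 0: step left, index 1: step right


def train_corridor_agent(n_cells=5, episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning for a simulated robot that starts in cell 0 of a
    1-D corridor and must reach the goal in the last cell (a toy environment)."""
    rng = random.Random(seed)
    goal = n_cells - 1
    q = [[0.0, 0.0] for _ in range(n_cells)]  # q[state][action] = estimated return
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Epsilon-greedy: usually exploit current estimates, sometimes explore
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[state][i])
            nxt = min(max(state + ACTIONS[a], 0), goal)  # walls at both ends
            reward = 1.0 if nxt == goal else -0.01       # small cost per step
            # Q-learning update: move the estimate toward reward + discounted best next value
            future = 0.0 if nxt == goal else gamma * max(q[nxt])
            q[state][a] += alpha * (reward + future - q[state][a])
            state = nxt
    return q


def greedy_policy(q):
    """Best learned action for every non-goal cell."""
    return ["right" if row[1] >= row[0] else "left" for row in q[:-1]]


if __name__ == "__main__":
    print(greedy_policy(train_corridor_agent()))
```

Nobody tells the agent which way to go: through trial and error, the per-step cost and the goal reward shape its estimates until the learned policy moves right in every non-goal cell.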

How can companies integrate AI into their existing robotics systems?

To integrate AI into an existing robotic system, first evaluate the robot’s hardware for computing capacity and compatibility with AI-capable components. You also need to understand your business needs and goals to identify the specific AI technologies, models, and data scope your project will require. The next step is defining the product features, scope of work, and optimal software tech stack to implement your vision. Waverley Software has the talent and competencies to guide you through the entire process of AI integration and development, from estimation and planning to implementation and support.
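One concrete part of the hardware evaluation is checking whether the models you plan to run fit into the robot’s onboard memory. The minimal Python sketch below estimates the memory taken by model weights alone (parameter count × bytes per parameter) and compares it with the available RAM minus a safety margin. The model names, sizes, the 256 MB budget, and the 30% headroom are hypothetical; a real assessment would also cover activations, latency, accelerator support, and power draw.

```python
from dataclasses import dataclass

# Bytes needed to store one parameter at each numeric precision
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


@dataclass
class ModelSpec:
    name: str        # hypothetical model name, for reporting only
    params: int      # number of parameters (weights)
    precision: str   # one of the keys in BYTES_PER_PARAM


def weights_mb(model: ModelSpec) -> float:
    """Approximate memory for the weights alone, in megabytes (10**6 bytes)."""
    return model.params * BYTES_PER_PARAM[model.precision] / 1e6


def fits_on_robot(models: list[ModelSpec], available_mb: float, headroom: float = 0.3) -> bool:
    """Return True if all models' weights fit in memory after reserving `headroom`.

    The reserved fraction stands in for activations, the OS, and other robot
    software; 30% is an illustrative assumption, not a standard figure.
    """
    budget_mb = available_mb * (1 - headroom)
    return sum(weights_mb(m) for m in models) <= budget_mb


if __name__ == "__main__":
    detector = ModelSpec("object-detector", 25_000_000, "fp32")       # 100 MB
    speech = ModelSpec("speech-recognizer", 40_000_000, "fp16")       # 80 MB
    print(fits_on_robot([detector, speech], available_mb=256))        # False
    detector_int8 = ModelSpec("object-detector", 25_000_000, "int8")  # 25 MB
    print(fits_on_robot([detector_int8, speech], available_mb=256))   # True
```

Here the two models need 180 MB against a budget of about 179 MB, so they do not fit; quantizing the detector to INT8 brings the total down to 105 MB, which does.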
